Attack Lab Automation - UPDATED

Introduction

If you recall from this post, I (briefly) went over how I was leveraging technologies such as Terraform and Ansible to deploy and provision my attack lab infrastructure. Some folks reached out looking for all the relevant code and more information so they could try this out for themselves and learn more. My apologies, as this update and the code publishing are way overdue.

I’ll continue to point out that there are far more robust labs similar to mine. One I will never fail to mention is @Centurion’s Detection Lab. Insanely complex, but in a good way! AttackLab-Lite is more suited to my needs and is a way to continuously improve my Terraform, Ansible, Jenkins, and “DevOps” skill sets. I just like to do projects that help me in my day-to-day and more narrowly fit my particular use cases. This is also an excuse to keep working on AttackLab-Lite and let it eventually evolve into a more robust lab environment with user simulation. In general, there isn’t much that’s special about AttackLab-Lite at the moment; it’s really just a means to get some VMs up and running with an Elastic Stack so I can do some testing of offensive tooling.

Hopefully this will help some folks out there learn more about Terraform, Packer, Ansible, and the like.

If you’re interested in the Jenkins and GitLab CI/CD approach to this, I’m dedicating a post to it so as not to detract from the core content. I swear, this will be posted soon!

So, What Changed?

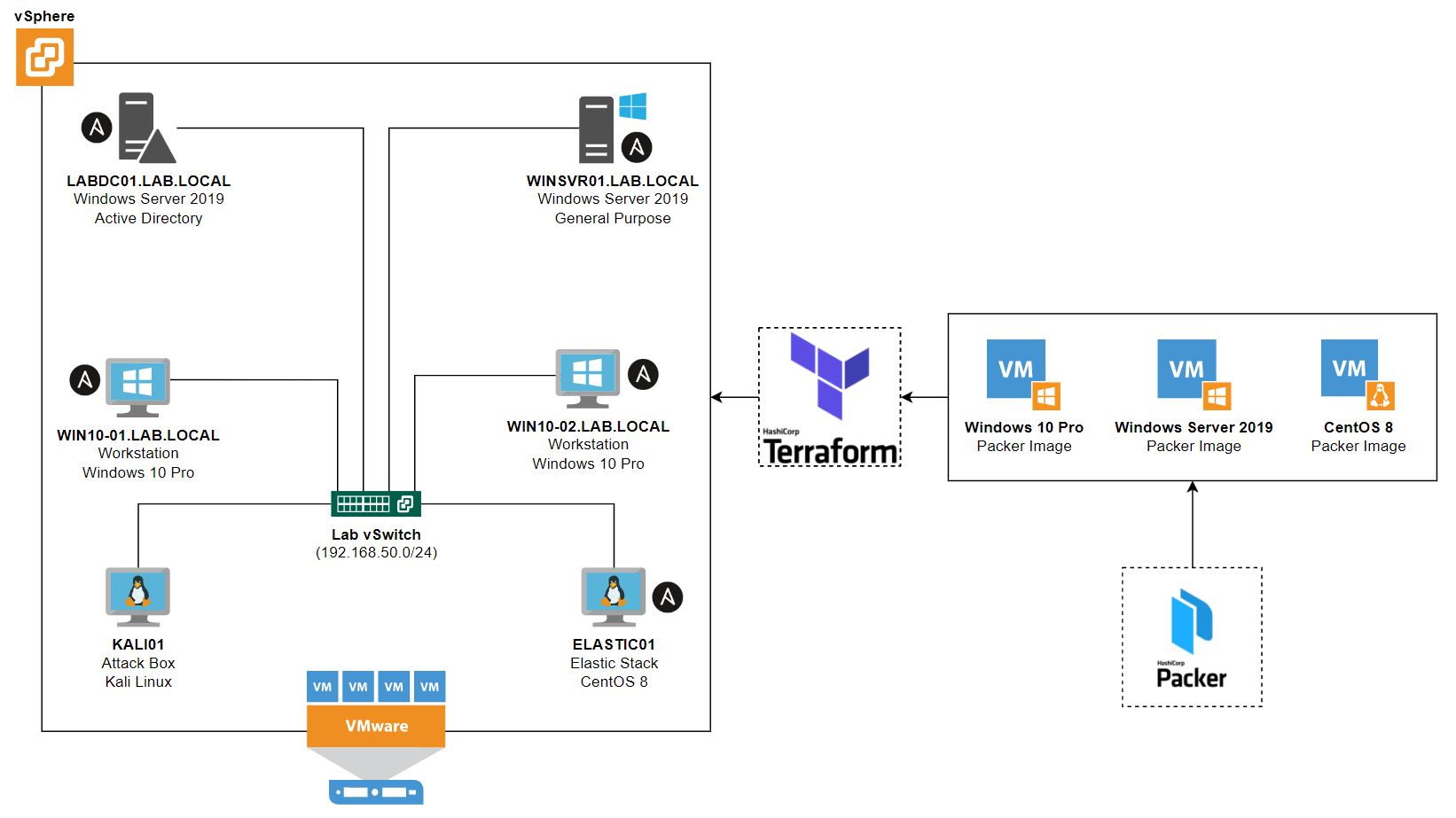

I’ve since made a few enhancements by adding in Packer and Jenkins to automagically build CentOS 8 Stream, Windows Server 2019, and Windows 10 virtual machine images to further automate the deployment of the AttackLab-Lite infrastructure to my local ESXi/vSphere environment.

Compared to the previous post, this will be a lot more technical and a deep dive into the new process I’ve developed. Remember, this project is a work in progress, and I would like to incorporate interesting things I find over time to give the lab more “real world” telemetry to work with, as far as logging and environment behaviors are concerned.

TL;DR

- Published Terraform, Packer, Ansible code to my GitHub

- Enlisted the help of Packer to build baseline images for Windows and Linux

- Cleaned up Ansible code and structure to be more cohesive and clean

- Moved to CentOS 8 Stream for Elastic Stack since technically not EOL

- Added Jenkins and Jenkins Pipelines for building/deploying/provisioning

- Experimented with GitLab’s CI/CD for fun and profit

What does it look like now?

Similar to the diagram in the last post, but I’ll toss in a newer one that includes what the (logical) flow of Packer and Terraform looks like for context.

What makes AttackLab-Lite an attack lab of sorts? At the moment, not too much. But the two key components are:

- Vulnerable-AD script is used to create some AS-REP/Kerberoastable users

- LDAP has been configured to allow NULL bind via PowerShell (Gist)

There’s more to come in the way of making the lab environment more vulnerable to common attacks through simulation, see the Retrospective section - it’s all in progress!

How is this Blog Post Structured?

There’s quite a bit of content in here covering the core of AttackLab-Lite and some optional areas, like Jenkins and GitLab CI/CD which will be covered in a separate blog post.

- Requirements & Assumptions - Requirements specific to AttackLab-Lite and some things to have in place before you get started

- Building Images with Packer - A quick review of Packer and how it’s being used to build baseline virtual machine images

- Windows Answer Files - Review of building answer files (Autounattend XML) from scratch and how they’re used to build images with Packer

- Terraform Code Overview - A quick review of how the Terraform code is structured and used in the lab

- Ansible Playbook Overview - A quick review of how Ansible playbooks are structured and used in the lab

- Installing Terraform, Packer, Ansible - How to install Terraform, Packer, and Ansible on Linux and Windows

- Deploying AttackLab-Lite - Building Packer images, deploying infrastructure with Terraform, and provisioning infrastructure with Ansible

- Post-Deployment Activities - Some tasks to do after deploying the lab, specifically around enrolling Windows 10/Windows 2019 servers into the Elastic Stack

- Retrospective - Things that are on the TODO or are in progress to make this a living project that can and will evolve over time

NOTE: This post is quite extensive and covers the what, why, and how of everything. If you’re interested in getting up and running with the lab as-is, feel free to consult the Wiki on the AttackLab-Lite GitHub page.

ESXi/vSphere Requirements & Assumptions

AttackLab-Lite was built around ESXi deployment. This post assumes you are using VMware ESXi and vSphere as your base infrastructure for deployment.

Additionally, within vSphere, it is assumed you have configured:

- A Datacenter

- A Cluster

- Have relevant ISO files uploaded to your ESXi host datastore (OS and Windows VMware Tools)

On that note, you’ll need to extract the Windows VMware Tools from your ESXi host and add them to your datastore so Packer can find them.

- Enable SSH on your ESXi host

- Use an application such as WinSCP to download the windows.iso VMware Tools ISO from /vmimages/tools-isoimages

- Upload the Windows VMware Tools ISO to your ESXi host datastore

Other aspects of AttackLab-Lite and content in the post don’t strictly apply to ESXi/vSphere, such as the building of Windows Answer files and Ansible playbooks. These can be applied anywhere and to a lot of different use cases.

Packer and Terraform may be specific to ESXi/vSphere in the context of this post, but the code can serve as a primer to use in different applications, like using Packer with VirtualBox and Terraform with DigitalOcean or AWS, for example.

Building Images with Packer

Packer is a tool that allows you, in an automated and unattended fashion, to build virtual machine images of different operating systems for different virtualization hypervisors based on defined requirements. Packer allows you to create VM images for a variety of platforms and environments such as vSphere, AWS, Azure, VirtualBox, Proxmox and more.

During this stage you could install software, but I save that for Ansible. In the event I want to add or remove software down the road, it’s much easier and time effective to change the Ansible code than to build new images and go through the build, deploy, and provision pipelines all over again.

While building images for the Windows systems of the lab is relatively easy, as is also the case with CentOS 7/8 (or anything RHEL, really), it’s a little trickier with Ubuntu. Ultimately, until I have the patience to figure out the Packer process for Ubuntu deployments, CentOS 8 Stream will be my go-to for the Linux-based aspects of the attack lab.

Windows 10 / Windows Server 2019

For both the Windows 10 and Windows Server 2019 Packer build processes, there are a few components that make it possible:

- Packer Template File

- Packer Variables File

- vSphere Variables File

- Windows Answer Files (autounattend.xml)

- Different PowerShell and Batch Scripts

Let’s break down the Windows 10 Packer template (win10.pkr.hcl); the structure is generally the same for the Windows Server 2019 and CentOS 8 Packer builds as well, but we’ll use the Windows 10 template as an example.

First, we’re defining the required Packer plugins for when we do our packer init later on, which tells Packer “Hey, these are the plugins I need to build out this image, go download them!” In this instance, we only have one which is for the vSphere Builder so Packer can interact with vSphere via API to build a virtual machine that will eventually become a template within vSphere itself.

#################

# PACKER PLUGINS

#################

packer {

required_version = ">= 1.8.3"

required_plugins {

vmware = {

version = ">= 1.0.8"

source = "github.com/hashicorp/vsphere"

}

}

}

Next, we define some variables for both the virtual machine itself as well as vSphere. These are intentionally blank as the real values are stored in win10.pkrvars.hcl and vsphere.pkrvars.hcl that Packer will use later to populate the relevant values.

####################

# VSPHERE VARIABLES

####################

variable "vsphere_ip" {

type = string

description = "vSphere Server IP Address or Hostname"

default = ""

}

variable "vsphere_username" {

type = string

description = "vSphere Username"

default = ""

}

variable "vsphere_password" {

type = string

description = "vSphere Password"

default = ""

sensitive = true

}

variable "vsphere_dc" {

type = string

description = "Datacenter in vSphere Instance"

default = ""

}

variable "vsphere_datastore" {

type = string

description = "Datastore to Reference"

default = ""

}

variable "vsphere_cluster" {

type = string

description = "Cluster in vSphere Instance"

default = ""

}

variable "vsphere_folder" {

type = string

description = "Destination Folder to Store Template"

default = ""

}

variable "vcenter_host" {

type = string

description = "ESXi Host where VM will be created"

default = ""

}

###############

# VM VARIABLES

###############

variable "vm_name" {

type = string

description = "Name of VM Template"

default = ""

}

variable "vm_guestos" {

type = string

description = "VM Guest OS Type"

default = ""

}

variable "vm_firmware" {

type = string

description = "Firmware type for the VM; i.e.: 'efi' or 'bios'"

default = ""

}

variable "vm_cpus" {

type = number

description = "Number of vCPU's for VM"

}

variable "vm_cpucores" {

type = number

description = "Number of vCPU Cores for VM"

}

variable "vm_ram" {

type = number

description = "Amount of RAM for VM"

}

variable "vm_cdrom_type" {

type = string

description = "CDROM Type for VM"

default = ""

}

variable "vm_disk_controller" {

type = list(string)

description = "VM Disk Controller"

}

variable "vm_disk_size" {

type = number

description = "Desired Disk Size for VM"

}

variable "vm_network" {

type = string

description = "Desired Virtual Network to Connect VM To"

default = ""

}

variable "vm_nic" {

type = string

description = "VM Network Interface Card Type"

default = ""

}

variable "builder_username" {

type = string

description = "VM Guest Username to Build With"

default = ""

}

variable "builder_password" {

type = string

description = "VM Guest User's Password to Authenticate With"

default = ""

}

variable "os_iso_path" {

type = string

description = "Path to ISO on Host's Datastore"

default = ""

}

variable "os_iso_url" {

type = string

description = "URL to ISO File for Packer to Download"

default = ""

}

variable "os_iso_checksum" {

type = string

description = "SHA256 Checksum of the Target ISO File"

default = ""

}

variable "vmtools_iso_path" {

type = string

description = "Path to ISO of Windows VMTools on Host's Datastore"

default = ""

}

variable "vm_notes" {

type = string

description = "Notes for VM Template"

default = ""

}
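For reference, a vsphere.pkrvars.hcl that feeds the vSphere variables declared above could look something like the sketch below. Every value is a hypothetical placeholder chosen for illustration (hostnames, folder, datastore, and credentials are all assumptions), so swap in whatever matches your environment.

```hcl
# vsphere.pkrvars.hcl - illustrative sketch only. Every value below is a
# hypothetical placeholder, not taken from the AttackLab-Lite repository.
vsphere_ip        = "vcenter.lab.local"            # vCenter IP address or hostname
vsphere_username  = "administrator@vsphere.local"  # account Packer logs in with
vsphere_password  = "CHANGE_ME"                    # keep real secrets out of Git
vsphere_dc        = "Datacenter"                   # datacenter name in vSphere
vsphere_datastore = "Datastore"                    # datastore that holds the ISOs
vsphere_cluster   = "Cluster"                      # cluster name in vSphere
vsphere_folder    = "Templates"                    # folder that receives the template
vcenter_host      = "esxi01.lab.local"             # ESXi host that runs the build VM
```

Keeping the environment-specific values in their own file means the same file can be passed to every image build alongside the per-image variables file, since Packer accepts more than one `-var-file` argument.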

Next, we’re instructing Packer on how to build out the virtual machine within vSphere based on our defined variables. We also call upon local files in the repository to be injected by Packer which include the all-important Answer File (autounattend.xml) and some Batch/PowerShell scripts.

#########

# SOURCE

#########

source "vsphere-iso" "win10-pro" {

vcenter_server = var.vsphere_ip

username = var.vsphere_username

password = var.vsphere_password

insecure_connection = true

datacenter = var.vsphere_dc

datastore = var.vsphere_datastore

cluster = var.vsphere_cluster

folder = var.vsphere_folder

host = var.vcenter_host

vm_name = var.vm_name

guest_os_type = var.vm_guestos

firmware = var.vm_firmware

CPUs = var.vm_cpus

cpu_cores = var.vm_cpucores

RAM = var.vm_ram

floppy_files = [

"autounattend.xml",

"../scripts/Install-VMTools.bat",

"../scripts/WinRM-Config.ps1"

]

cdrom_type = var.vm_cdrom_type

disk_controller_type = var.vm_disk_controller

storage {

disk_size = var.vm_disk_size

disk_thin_provisioned = true

}

network_adapters {

network = var.vm_network

network_card = var.vm_nic

}

notes = var.vm_notes

convert_to_template = true

communicator = "winrm"

winrm_username = var.builder_username

winrm_password = var.builder_password

winrm_timeout = "3h"

iso_paths = [

var.os_iso_path,

var.vmtools_iso_path

]

#iso_url = var.os_iso_url

#iso_checksum = var.os_iso_checksum

remove_cdrom = true

}

Finally, we go into the build process, which puts it all together and eventually communicates over WinRM, or SSH if a Linux build, to gracefully shut down the VM and convert it to a template as defined in the Source section.

########

# BUILD

########

build {

sources = ["source.vsphere-iso.win10-pro"]

provisioner "windows-shell" {

inline = ["shutdown /s /t 5 /f /d p:4:1 /c \"Packer Shutdown\""]

}

}

The virtual machine settings themselves come from variables defined in a separate file, win10.pkrvars.hcl, which is shown below.

#Virtual Machine Settings

vm_name = "Win10Pro-Template"

vm_guestos = "windows9_64Guest"

vm_cpus = "1"

vm_cpucores = "4"

vm_ram = "4096"

vm_disk_controller = ["lsilogic-sas"]

vm_disk_size = "61440"

vm_network = "VM Network"

vm_nic = "e1000e"

vm_notes = "AttackLab-Lite - Windows 10 Pro Template - "

vm_cdrom_type = "sata"

#WinRM Communicator Authentication; based on Autounattend.xml file

builder_username = "Administrator"

builder_password = "P@ssW0rd1!"

#ISO URL (https://gist.github.com/mndambuki/35172b6485e40a42eea44cb2bd89a214) - Windows 10 Enterprise 1909

#os_iso_url = "https://software-download.microsoft.com/download/pr/18362.30.190401-1528.19h1_release_svc_refresh_CLIENTENTERPRISEEVAL_OEMRET_x64FRE_en-us.iso"

#os_iso_checksum = "ab4862ba7d1644c27f27516d24cb21e6b39234eb3301e5f1fb365a78b22f79b3"

#Path to Windows 10 ISO on VM Host

os_iso_path = "[Datastore] ISO/Win10_21H1_English_x64.iso"

os_iso_checksum = "6911e839448fa999b07c321fc70e7408fe122214f5c4e80a9ccc64d22d0d85ea"

#Enable SSH on ESXi host and use WinSCP to browse to "/vmimages/tools-isoimages" and download "windows.iso" from there, then upload to datastore

vmtools_iso_path = "[Datastore] vmtools/windows.iso"

The Batch and PowerShell scripts are pretty basic for both Windows 10 and Server 2019. They simply enable WinRM so Packer can communicate with the VM, and install VMware Tools.

CentOS 8 Stream

For CentOS 8 Stream image building with Packer, the components are nearly the same as Windows, with the exception of the instructions that tell the operating system how to set itself up:

- Packer Template File

- Packer Variables File

- vSphere Variables File

- Kickstart Template

Below is the Kickstart file used for building the base CentOS 8 Stream image. The Kickstart file is hosted via an HTTP server, provided by Packer, and is passed as a boot parameter when the CentOS 8 VM is initially created and started. This acts as a set of instructions that tells CentOS how and what to install and configure automagically - kind of like a Windows answer file!

Before reviewing the Kickstart file, let’s take a look at a portion of the centos8-stream.pkrvars.hcl file to see how it differs slightly from the Windows approach, specifically how the boot parameters are set via boot_command to pull the Kickstart configuration from Packer’s HTTP server.

...

...

...

http_port_min = 8601

http_port_max = 8610

http_directory = "http"

boot_order = "disk,cdrom"

boot_wait = "5s"

boot_command = ["<tab><bs><bs><bs><bs><bs><bs> text inst.ks=http://{{ .HTTPIP }}:{{ .HTTPPort }}/ks.cfg<enter><wait>"]

shutdown_command = "echo '${var.builder_password}' | sudo -S -E shutdown -P now"

remove_cdrom = true

...

...

...

The boot_command parameter modifies the initial boot option when booting from the CentOS 8 ISO to invoke inst.ks which tells CentOS that a Kickstart file will be used to drive the installation in an unattended manner. This points to the Kickstart template that is hosted via Packer’s built-in HTTP server.

Boot option being modified by Packer
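The excerpt from the CentOS configuration elides the rest of the source block. Since Linux builds talk to the guest over SSH rather than WinRM, the communicator portion presumably looks something like the sketch below. The source name `centos8-stream` and the timeout value are assumptions on my part; `communicator`, `ssh_username`, `ssh_password`, and `ssh_timeout` are standard Packer communicator options.

```hcl
# Fragment of a vsphere-iso source for the CentOS build (sketch only).
# The source name and the timeout are assumptions; the vSphere connection,
# hardware, ISO, and boot settings are left out on purpose.
source "vsphere-iso" "centos8-stream" {
  # ... vSphere connection, hardware, ISO, and boot settings go here ...

  communicator = "ssh"                 # Linux guests use SSH instead of WinRM
  ssh_username = var.builder_username  # must match an account configured in ks.cfg
  ssh_password = var.builder_password  # must match the password set in ks.cfg
  ssh_timeout  = "30m"                 # leave room for the unattended install
}
```

Packer keeps retrying the SSH connection until the timeout expires, so the value only needs to be comfortably longer than the unattended install takes in your environment.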

With the Kickstart file, I learned the hard way that it’s important to ensure perl is installed as part of this process within the post-installation tasks section. Otherwise, Terraform will fail to do some post-deployment provisioning tasks later on, such as setting the hostname and IP addresses of the VM.

#CentOS 8 Stream - AttackLab-Lite (10/2022)

#Kickstarter Configuration File

#Accept EULA

eula --agreed

#Install from CDROM

cdrom

#Text-based Installation

text

#Use Network Mirror for Packages

url --url="http://mirror.centos.org/centos/8-stream/BaseOS/x86_64/os/"

#Ignore installation of Xorg

skipx

#Disable Initial Setup on First Boot

firstboot --disable

#Set Timezone

timezone America/New_York --isUtc

#Set Language

lang en_US.UTF-8

#Set Keyboard Layout

keyboard --vckeymap=us --xlayouts='us'

#DHCP Network Configuration

network --onboot=yes --device=ens192 --bootproto=dhcp --noipv6 --activate

#Set Hostname

network --hostname=centos8-template

#Disable Firewall

firewall --disabled

#Disable SELinux

selinux --disabled

#Set bootloader to MBR

bootloader --location=mbr

#Partition Scheme (auto, LVM)

autopart --type=lvm

#Initialize Disk

clearpart --linux --initlabel

#Root Password: P@ssW0rd1!

rootpw --iscrypted $6$S.3DjrjF.OEF$6xfMp7j.iVatWKlLQQiSQecOST8tJl8kSju0X2IwDZVTTDGqOULdioDVONKAwJuB.z/fhuH9sd5ocYfrI22N30

#Core CentOS 8 Packages to Install

%packages

@^server-product-environment

%end

#Post-installation - Additional Steps

%post

yum update -y

yum install -y open-vm-tools perl

systemctl enable --now cockpit.socket

systemctl enable --now sshd.service

%end

#Reboot after installation is complete

reboot --eject
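To make the perl requirement a little more concrete: when Terraform later clones this template, the hostname and IP address are applied through vSphere guest customization, which on Linux guests depends on Perl being present. Below is a rough sketch of what that customization looks like on the Terraform side. The template data source name, hostname, domain, and addresses are hypothetical placeholders, and the fragment belongs inside a `vsphere_virtual_machine` resource.

```hcl
# Fragment of a vsphere_virtual_machine resource (sketch; names and
# addresses are hypothetical). vSphere applies these settings inside the
# guest, which is why the template needs open-vm-tools and perl installed.
clone {
  template_uuid = data.vsphere_virtual_machine.CentOS8-Template.id

  customize {
    linux_options {
      host_name = "labelk01"   # hypothetical hostname
      domain    = "lab.local"  # hypothetical domain
    }

    network_interface {
      ipv4_address = "192.168.50.10"  # hypothetical static IP
      ipv4_netmask = 24
    }

    ipv4_gateway    = "192.168.50.1"      # hypothetical gateway
    dns_server_list = ["192.168.50.200"]  # lab Domain Controller
  }
}
```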

I’ve found that building a Kickstart file was much easier and there’s an excellent resource you can reference to help you build your own Kickstart file, like this guide over here.

The root password SHA-512 digest is generated using the mkpasswd command, which is a part of the whois package… not sure why. But, if you install whois in Ubuntu or CentOS, or whatever flavor of Linux, you can create a SHA-512 digest of the root password by issuing the following command:

mkpasswd -m sha-512 "<PASSWORD_HERE>"

With that, you can replace the password in the Kickstart file as needed, but I’d suggest leaving it as-is since this is just a lab environment. If you do change it, however, you’ll need to reflect the change in the terraform.tfvars file, the centos8.pkrvars.hcl file, and the Ansible inventory file so as not to break anything.

What about Windows Answer Files?

Glad you asked! Building the autounattend.xml file, or answer file, for Packer to leverage when building out the base Windows 10 and Windows Server 2019 images is essential to ensuring a clean working image that Ansible will take over later, just as the Kickstart template does for CentOS. I had to dust off the old Sysadmin skills for this part as well as review others’ answer files for inspiration and clarification.

To develop the answer files, we’ll need to enlist the help of some software from Microsoft, which is the Microsoft Assessment and Deployment Kit (ADK). I stood up a Windows 10 virtual machine to install ADK on to generate the answer files for both Windows 10 and Windows Server 2019.

You don’t have to manually create your own answer file; feel free to use the ones available in the GitHub repository, or read on if you’re curious. Additionally, there are some answer file generators available online, such as this one. I haven’t tested them, but I don’t see why they wouldn’t work.

Prerequisite Items

Along with the files outlined below, you’ll also need a Windows 10 host to install the ADK software on and extract the relevant file from the ISOs.

Snag the following software to get started building answer files:

- Microsoft Assessment and Deployment Kit (ADK)

- Windows 10 21H1 Trial ISO (via Archive.org)

- Windows Server 2019 Trial ISO

I suggest using a Windows 10 21H1 ISO as there may be some compatibility issues between the most recent version of Windows 10 and ADK/Windows System Image Manager.

Extracting the WIM Image (Windows 10, Server 2019)

First, you will extract the relevant WIM image from the ISO. The WIM image is the base installation image and contains all necessary files for an installation of the Windows operating system version (i.e.: Pro vs Home) and allows you to customize the installation using tools in the ADK suite in order to generate those answer files. I could be wrong, but this is my approach to generating those files.

I mount the Windows 10 ISO natively and copy the install.wim file from the ISO, which is found under the D:\sources\ directory (assuming the ISO is mounted to D:\). In my case, I copy the install.wim file to C:\Temp\

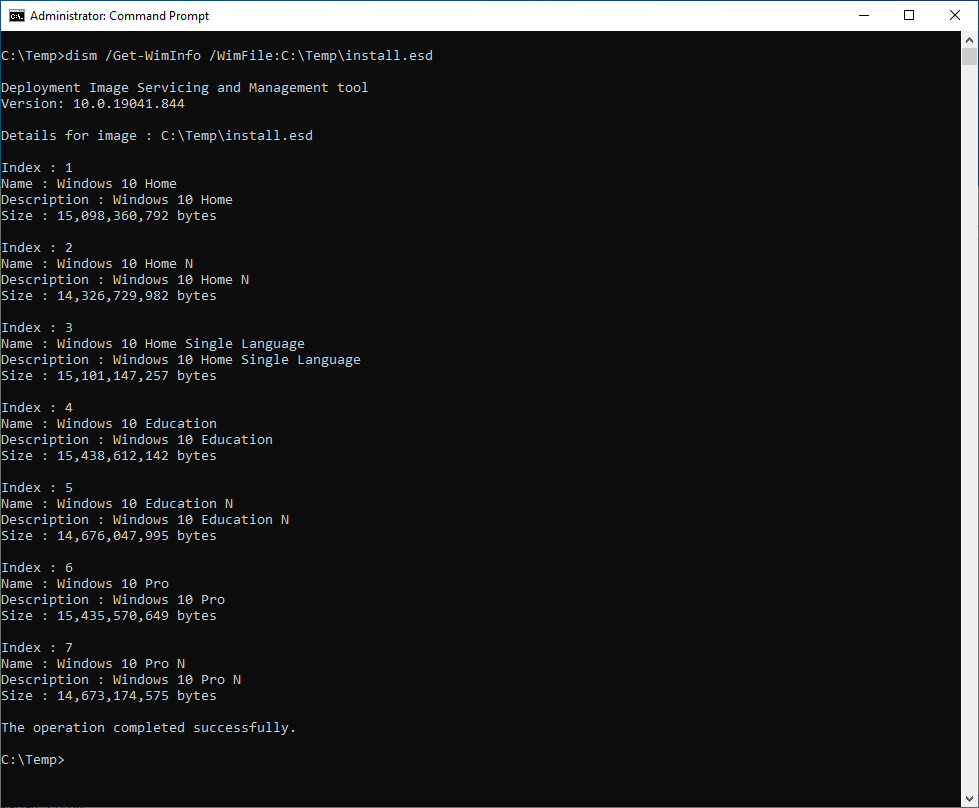

Next, open up PowerShell or Command Prompt as an Administrator and run the following command:

dism /Get-WimInfo /WimFile:C:\Temp\install.wim

This will show you the index position for the edition of Windows 10 you want to base your answer file around. In my case, Windows 10 Pro will do just fine, and it is typically index number 6. Below is an example of using dism to check the install.wim file on the Windows 10 ISO that I mounted to my virtual machine.

We do this process for two reasons:

- We use dism to get the Windows 10 Pro index position (6) from the WIM file, which is used later in the Answer File (amd64_Microsoft-Windows-Setup_neutral)

- We’re making a copy of the WIM image for later use/import into Windows System Image Manager (WISM)

Generating an Answer File for Windows 10

Generating a Windows Answer file is a somewhat complex task, but there are a lot of resources that help break down the process on a deeper level, such as this documentation from Microsoft. I won’t be going into detail on how to build an answer file end-to-end, but will walk through the high-level steps to get started.

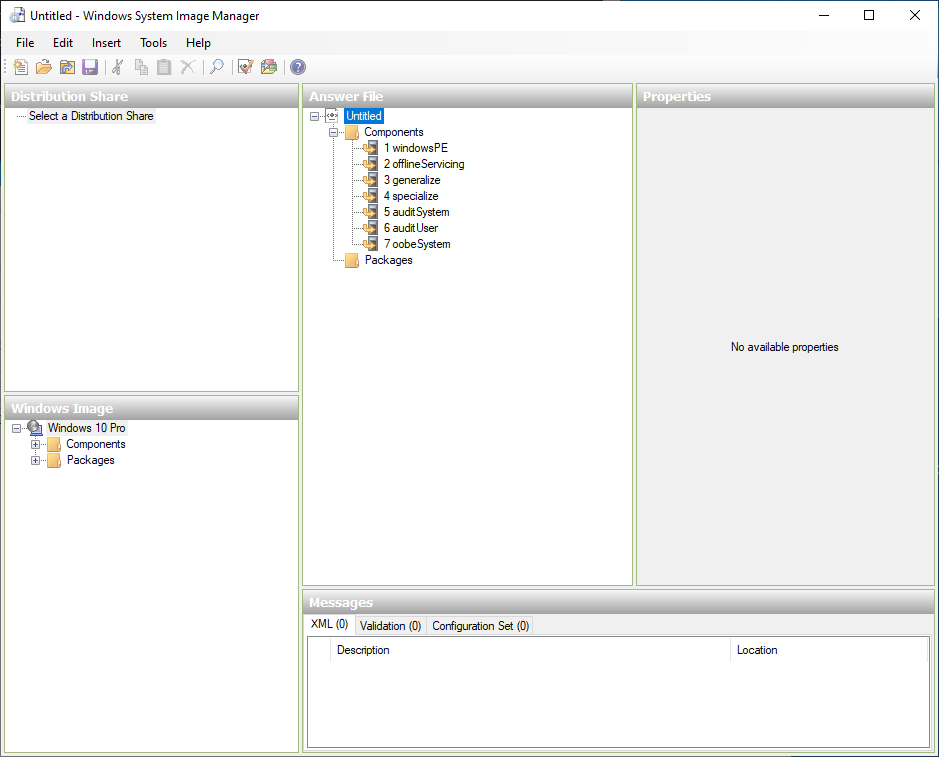

With your Windows 10 Pro WIM image extracted from the ISO, you can now use the Windows System Image Manager you installed earlier through the ADK suite.

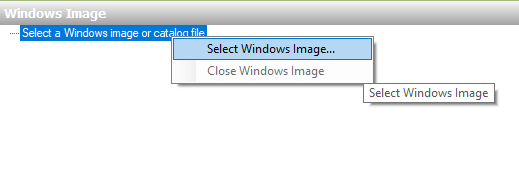

Launch Windows System Image Manager and import your freshly extracted WIM image by right-clicking “Select a Windows image or catalog file” from the bottom-left section titled “Windows Image” and browse to the location of the Windows 10 Pro WIM we extracted earlier.

Upon selecting the WIM image, you may receive a message about a missing catalog file and be requested to have it be created. Accept this by clicking “Yes” and allow WISM to generate the catalog file - this can take several minutes.

After the catalog file has been generated, click “File” and select “New Answer File…“ This will populate content under the “Answer File” section of WISM.

The answer file Components we’ll be focusing on are:

- windowsPE - Windows Preinstallation Environment (Windows PE) is the section used to define settings specific to the Windows installation, such as disk partitioning.

- offlineServicing - During installation, this section allows you to install additional items such as drivers or set different settings to the image to be installed

- specialize - Majority of settings go here as these settings are acknowledged at the beginning of the Out-Of-Box Experience (OOBE) such as executing commands and setting timezone or locale settings

- oobeSystem - Settings here will run after the OOBE process is completed, such as executing additional scripts and commands

Each of the components above links to Microsoft’s documentation for additional information and reading.

Once complete, click “File” and then “Save Answer File As…“ to save your Answer File.

There are a lot of answer files available on GitHub for Packer and Windows, which you’re free to use. I based mine off of several available examples from GitHub and added a few changes to mine.

One of the changes would be enabling RDP and setting the firewall rule for RDP in the answer file itself within the specialize section of the answer file; see below.

<component name="Microsoft-Windows-TerminalServices-LocalSessionManager" processorArchitecture="amd64" publicKeyToken="31bf3856ad364e35" language="neutral" versionScope="nonSxS" xmlns:wcm="http://schemas.microsoft.com/WMIConfig/2002/State" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">

<fDenyTSConnections>false</fDenyTSConnections>

</component>

<component name="Networking-MPSSVC-Svc" processorArchitecture="amd64" publicKeyToken="31bf3856ad364e35" language="neutral" versionScope="nonSxS" xmlns:wcm="http://schemas.microsoft.com/WMIConfig/2002/State" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">

<FirewallGroups>

<FirewallGroup wcm:action="add" wcm:keyValue="RemoteDesktop">

<Active>true</Active>

<Group>Remote Desktop</Group>

<Profile>all</Profile>

</FirewallGroup>

</FirewallGroups>

</component>

<component name="Microsoft-Windows-TerminalServices-RDP-WinStationExtensions" processorArchitecture="amd64" publicKeyToken="31bf3856ad364e35" language="neutral" versionScope="nonSxS" xmlns:wcm="http://schemas.microsoft.com/WMIConfig/2002/State" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">

<SecurityLayer>2</SecurityLayer>

<UserAuthentication>1</UserAuthentication>

</component>

This gets added via the following components in WISM:

- amd64_Microsoft-Windows-TerminalServices-LocalSessionManager_neutral

- amd64_Microsoft-Windows-TerminalServices-RDP-WinStationExtensions_neutral

- amd64_Networking-MPSSVC-Svc_neutral

A lot of folks will enable and configure RDP using a PowerShell script or Batch file, but I took the approach of doing so using the answer file. Whether it’s the right or wrong approach, I’m not sure, but it’s giving the results I’m going after.

Feel free to compare and contrast the Answer File in my GitHub repository for both Windows 10 and Server 2019 against WISM so you can see how it’s built out and structured.

Generating an Answer File for Windows Server 2019

Obtain the install.wim from the Windows Server 2019 ISO just like you did with Windows 10 and use the Windows System Image Manager (WISM) tool provided by the ADK suite to create an answer file for Windows Server 2019. Just note, when importing the WIM image from the Windows Server 2019 ISO into WISM, you should select “Windows Server 2019 SERVERSTANDARD” from the selection.

The process is essentially identical to that of Windows 10 Pro, and in my environment the Windows Server 2019 answer file is structured and built the same way as the Windows 10 Pro one.

Deploying Infrastructure with Terraform

Terraform is an Infrastructure-as-Code (IaC) tool that allows you to deploy and configure different resources across various platforms, such as deploying virtual machines in cloud environments like AWS or DigitalOcean. I’ve covered Terraform in the previous post in more detail and don’t want to repeat myself.

From the lab perspective, nothing has really changed in the way of Terraform except that virtual machines are now deployed in my ESXi/vSphere environment and derive from the Packer images built as described in the previous section. I use Terraform to deploy the following infrastructure:

- 2x Windows Server 2019 VMs (Domain Controller, General Purpose Server)

- 2x Windows 10 Pro VMs (Clients)

- 1x CentOS 8 Stream VM (Elastic Stack)

Kali Linux is also in the lab, but it is deployed manually. I just attach the network interface to my Lab vSwitch so it can interact with the rest of the virtual machines in the lab.

Below is a layout of the Terraform files used for the lab. I keep the numbering on each file because I was used to load order mattering in previous versions of Terraform, but with version 0.12+ that’s no longer the case as Terraform treats all .tf files as a single document. Old habits.

terraform

├── 01-Win2019.tf

├── 02-Win10.tf

├── 03-CentOS8-Stream.tf

├── provider.tf

├── terraform.tfvars

└── variables.tf
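For context, the resource files lean on a provider block and a handful of data sources that resolve names in vSphere to IDs. A minimal sketch of what those could look like is below. The data source labels `datastore`, `cluster`, `network`, and `Win2019-Template` match what the Windows Server 2019 resource later in this post references, but the variable names, the `dc` label, and the template name are assumptions for illustration rather than a copy of the repository.

```hcl
# Sketch of provider.tf plus supporting data sources. Variable names, the
# "dc" label, and the template name are hypothetical.
provider "vsphere" {
  user                 = var.vsphere_user
  password             = var.vsphere_password
  vsphere_server       = var.vsphere_server
  allow_unverified_ssl = true # lab only: skips TLS certificate validation
}

data "vsphere_datacenter" "dc" {
  name = var.vsphere_datacenter
}

data "vsphere_datastore" "datastore" {
  name          = var.vsphere_datastore
  datacenter_id = data.vsphere_datacenter.dc.id
}

data "vsphere_compute_cluster" "cluster" {
  name          = var.vsphere_cluster
  datacenter_id = data.vsphere_datacenter.dc.id
}

data "vsphere_network" "network" {
  name          = var.vsphere_network
  datacenter_id = data.vsphere_datacenter.dc.id
}

# Looks up the template Packer produced so its settings can be reused
data "vsphere_virtual_machine" "Win2019-Template" {
  name          = "Win2019-Template" # assumed template name
  datacenter_id = data.vsphere_datacenter.dc.id
}
```

Reading the guest ID, SCSI type, firmware, and disk settings from the template data source keeps the cloned VMs consistent with whatever Packer built, instead of repeating those values by hand.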

Using the terraform.tfvars file, I define the number of instances of each virtual machine that I’d like to deploy by placing them in a list. By commenting out, adding, or removing, I can control how many to deploy - in the event I only want a Domain Controller for testing, I can opt to exclude the general purpose Windows Server 2019 virtual machine from the deployment. It makes the Ansible aspect later on a bit messy once you start omitting infrastructure, so I tend to keep it as is.

# Windows Server 2019 (DC, General Purpose)

windows-2019 = [

{

vm_name = "LABDC01"

folder = "AD Attack Lab"

vcpu = "2"

memory = "4096"

admin_password = "P@ssW0rd1!"

ipv4_address = "192.168.50.200"

dns_server_list = ["127.0.0.1","192.168.50.1"]

},

{

vm_name = "LABSVR01"

folder = "AD Attack Lab"

vcpu = "2"

memory = "4096"

admin_password = "P@ssW0rd1!"

ipv4_address = "192.168.50.100"

dns_server_list = ["192.168.50.200","192.168.50.1"]

}

]

This is used in conjunction with the 01-Win2019.tf file which will iterate through the terraform.tfvars file to enumerate how many Windows Server 2019 virtual machines are being requested based on the windows-2019 = [] list as shown above. This will be shown in more detail later in the post.
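On the variables.tf side, the windows-2019 list needs a matching declaration. Here is a sketch of what that could look like, assuming an object type (the repository may well use a looser type). Note that the resource shown later in this post also looks up an `ipv4_gateway` key, so each entry in terraform.tfvars would need to carry one even though the excerpt above doesn't show it.

```hcl
# Sketch of a variables.tf declaration for the windows-2019 list.
# The object type is an assumption; attribute names mirror the keys used
# in terraform.tfvars and in the lookup() calls of the resource.
variable "windows-2019" {
  description = "Windows Server 2019 VMs to deploy, one entry per VM"

  type = list(object({
    vm_name         = string
    folder          = string
    vcpu            = string
    memory          = string
    admin_password  = string
    ipv4_address    = string
    ipv4_gateway    = string # looked up by the resource, so required per entry
    dns_server_list = list(string)
  }))
}
```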

Below is an example of building out the Windows Server 2019 virtual machine resource in the 01-Win2019.tf file. Pay close attention to the repeating value throughout the file…

resource "vsphere_virtual_machine" "windows-2019" {

count = length(var.windows-2019)

name = lookup(var.windows-2019[count.index], "vm_name")

datastore_id = data.vsphere_datastore.datastore.id

resource_pool_id = data.vsphere_compute_cluster.cluster.resource_pool_id

num_cpus = lookup(var.windows-2019[count.index], "vcpu")

memory = lookup(var.windows-2019[count.index], "memory")

guest_id = data.vsphere_virtual_machine.Win2019-Template.guest_id

scsi_type = data.vsphere_virtual_machine.Win2019-Template.scsi_type

firmware = data.vsphere_virtual_machine.Win2019-Template.firmware

folder = lookup(var.windows-2019[count.index], "folder")

network_interface {

network_id = data.vsphere_network.network.id

adapter_type = data.vsphere_virtual_machine.Win2019-Template.network_interface_types[0]

}

disk {

label = "disk0"

size = data.vsphere_virtual_machine.Win2019-Template.disks.0.size

eagerly_scrub = data.vsphere_virtual_machine.Win2019-Template.disks.0.eagerly_scrub

thin_provisioned = data.vsphere_virtual_machine.Win2019-Template.disks.0.thin_provisioned

}

clone {

template_uuid = data.vsphere_virtual_machine.Win2019-Template.id

customize {

timeout = 20

windows_options {

computer_name = lookup(var.windows-2019[count.index], "vm_name")

admin_password = lookup(var.windows-2019[count.index], "admin_password")

auto_logon = true

auto_logon_count = 1

time_zone = 035

#Download and execute ConfigureRemotingforAnsible (WinRM)

run_once_command_list = [

"powershell.exe [Net.ServicePointManager]::SecurityProtocol=[Net.SecurityProtocolType]::Tls12; Invoke-WebRequest -Uri https://raw.githubusercontent.com/ansible/ansible/devel/examples/scripts/ConfigureRemotingForAnsible.ps1 -Outfile C:\\WinRM_Ansible.ps1",

"powershell.exe -ExecutionPolicy Bypass -File C:\\WinRM_Ansible.ps1"

]

}

network_interface {

ipv4_address = lookup(var.windows-2019[count.index], "ipv4_address")

ipv4_netmask = 24

}

ipv4_gateway = lookup(var.windows-2019[count.index], "ipv4_gateway")

dns_server_list = lookup(var.windows-2019[count.index], "dns_server_list")

}

}

}

Notice the many instances of lookup(var.windows-2019[count.index], ...) littered throughout the file. The count argument is set to the length of the windows-2019 = [] list from the terraform.tfvars file, so Terraform creates one virtual machine per list entry, and count.index is the position of the entry currently being built. Each lookup() pulls a value such as the number of vCPUs or the amount of vRAM out of that entry, so every resource declared in the list is built out to its own specification. The same rules apply for the CentOS 8 and Windows 10 virtual machines that get deployed via Terraform - they’re declared as well in the terraform.tfvars file and have their own respective Terraform file to deploy from.
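Because the resource uses count, each virtual machine is tracked in Terraform state under an indexed address, which is handy when you want to inspect or target a single machine. Below is a minimal sketch, assuming you are inside an initialized Terraform directory and that LABDC01 is the first entry in the list. The vm_address and run helpers are hypothetical names used only for illustration, and the script only prints the commands unless DRY_RUN=0 is set.

```shell
#!/usr/bin/env bash
# Sketch: address individual count-based instances of the windows-2019 resource.
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands (default); 0 = execute them

# Hypothetical helper: build the state address for list index $1.
vm_address() {
  printf 'vsphere_virtual_machine.windows-2019[%s]' "$1"
}

# Hypothetical helper: print a command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# List everything Terraform is tracking; count-based resources appear with [0], [1], ...
run terraform state list

# Plan only the first list entry (assumed to be LABDC01).
run terraform plan -target="$(vm_address 0)"
```

Targeting a single address is an alternative to commenting entries out of terraform.tfvars when you only want one machine for a quick test.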

I love Terraform for infrastructure automation, whether for Red Team infrastructure, CTF environments, or my home lab. You can also use Terraform to provision your infrastructure post-deployment. In my case, however, I’ll be using Ansible for post-deployment tasks.

Post-Deployment Activities with Ansible

After Terraform has deployed the base infrastructure, I leverage Ansible to perform post-deployment tasks. This stage includes configuring the systems in the deployment, installing software, and executing additional scripts.

Using Ansible, I created playbooks that install software on the Windows hosts via Chocolatey, install the components required for the Elastic Stack on the CentOS 8 virtual machine using native yum package management, copy over core files, and make other configuration changes to the systems.

I use Ansible in the environment because, as I mentioned before, it’s easier to change a few lines of code in the playbooks than to bake software installations in during the build process with Packer. If software is baked into the image, adding or removing anything means rebuilding from square one, and I’d rather have the process as linear as possible. Even post-deployment and post-provisioning, I can always re-run a (modified) playbook later if I need to.

The layout of the Ansible playbooks looks like the following:

ansible
├── ansible.cfg
├── attacklab.yml
├── inventory.yml
└── roles
    ├── active-directory
    │   ├── tasks
    │   │   └── main.yml
    │   └── vars
    │       └── main.yml
    ├── elasticstack
    │   ├── files
    │   │   ├── elasticsearch.repo
    │   │   ├── input-beat.conf
    │   │   └── output-elasticsearch.conf
    │   ├── tasks
    │   │   └── main.yml
    │   └── vars
    │       └── main.yml
    ├── join-domain
    │   ├── tasks
    │   │   └── main.yml
    │   └── vars
    │       └── main.yml
    ├── vulnerable-ad
    │   ├── files
    │   │   ├── LDAP_NULL_Bind.ps1
    │   │   └── Vulnerable-AD.ps1
    │   ├── tasks
    │   │   └── main.yml
    │   └── vars
    │       └── main.yml
    └── windows-init
        ├── files
        │   ├── bginfo.bat
        │   ├── lab_bginfo.bgi
        │   └── sysmon.xml
        ├── tasks
        │   └── main.yml
        └── vars
            └── main.yml

Roles and Playbooks

There are five (5) different roles that break out into core playbooks for the lab environment:

windows-init - This is used on all Windows-based systems. It installs software via Chocolatey and copies over some files, such as the Sysmon configuration and Bginfo configuration

---

- name: Disable IPv6

win_shell: reg add HKLM\SYSTEM\CurrentControlSet\Services\Tcpip6\Parameters /v DisabledComponents /t REG_DWORD /d 255 /f

- name: Create LabFiles folder in C:\

win_file:

path: C:\LabFiles\

state: directory

- name: Install Chocolatey for Package Management

win_chocolatey:

name:

- chocolatey

- chocolatey-core.extension

state: present

- name: Install bginfo

win_chocolatey:

name: bginfo

state: present

- name: Copy bginfo Configuration File

win_copy:

src: "files/lab_bginfo.bgi"

dest: C:\LabFiles\lab_bginfo.bgi

- name: Copy bginfo Startup Script

win_copy:

src: "files/bginfo.bat"

dest: "C:\\ProgramData\\Microsoft\\Windows\\Start Menu\\Programs\\Startup\\bginfo.bat"

- name: Start bginfo with Configuration

win_shell: C:\ProgramData\chocolatey\bin\Bginfo64.exe C:\LabFiles\lab_bginfo.bgi /SILENT /NOLICPROMPT /TIMER:0

- name: Install Sysmon

win_chocolatey:

name: sysmon

state: present

- name: Copy Sysmon Configuration File (@SwiftOnSecurity)

win_copy:

src: "files/sysmon.xml"

dest: C:\LabFiles\sysmon.xml

- name: Install, Configure, and Enable Sysmon64

win_shell: C:\ProgramData\chocolatey\bin\Sysmon64.exe -accepteula -i C:\LabFiles\sysmon.xml

ignore_errors: yes

- name: Install Sysinternals Suite

win_chocolatey:

name: sysinternals

state: present

- name: Create Sysinternals Shortcut

win_shortcut:

src: C:\ProgramData\chocolatey\lib\sysinternals\tools

dest: C:\LabFiles\Sysinternals.lnk

- name: Download Elastic Agent ()

win_get_url:

url: https://artifacts.elastic.co/downloads/beats/elastic-agent/elastic-agent--windows-x86_64.zip

dest: C:\LabFiles\elastic-agent--windows-x86_64.zip

- name: Extract Elastic Agent ZIP Archive

win_shell: Expand-Archive -LiteralPath 'C:\LabFiles\elastic-agent--windows-x86_64.zip' -DestinationPath C:\LabFiles\

- name: Delete Elastic Agent ZIP File

win_file:

path: C:\LabFiles\elastic-agent--windows-x86_64.zip

state: absent

active-directory - This role is dedicated to the Domain Controller and sets up Active Directory Domain Services

---
- name: Install Active Directory Domain Services (AD DS)
  win_feature:
    name: AD-Domain-Services
    include_management_tools: yes
    include_sub_features: yes
    state: present
  register: adds_installed

- name: Create new Domain in a new Forest ()
  win_domain:
    dns_domain_name: ""
    safe_mode_password: ""
    install_dns: yes
    domain_mode: Win2012R2
    domain_netbios_name: ""
    forest_mode: Win2012R2
    sysvol_path: C:\Windows\SYSVOL
    database_path: C:\Windows\NTDS
  register: dc_promo

- name: Restart Server
  win_reboot:
    msg: "Active Directory installed via Ansible - rebooting..."
    pre_reboot_delay: 10
  # Reboot only when the domain promotion reports that one is needed
  when: dc_promo.reboot_required

- name: Waiting for reconnect after reboot...
  wait_for_connection:
    delay: 60
    timeout: 1800

- name: Add DNS A record for LABELK01
  win_shell: Add-DnsServerResourceRecordA -Name labelk01 -ZoneName lab.local -AllowUpdateAny -IPv4Address -TimeToLive 01:00:00

vulnerable-ad - This role is dedicated to the Domain Controller and executes two different PowerShell scripts: Vulnerable-AD and a custom script intended to make NULL LDAP binds possible

---
- name: Sleep for 5 minutes post-AD Install
  pause:
    minutes: 5

# win_copy is the Windows counterpart of the copy module
- name: Copy 'Vulnerable-AD.ps1' to C:\LabFiles
  win_copy:
    src: "files/Vulnerable-AD.ps1"
    dest: C:\LabFiles\Vulnerable-AD.ps1

- name: Copy 'LDAP_NULL_Bind.ps1' to C:\LabFiles
  win_copy:
    src: "files/LDAP_NULL_Bind.ps1"
    dest: C:\LabFiles\LDAP_NULL_Bind.ps1

- name: Make LDAP NULL Bind Possible
  win_shell: C:\\LabFiles\\LDAP_NULL_Bind.ps1

- name: Import VulnAD Module and Invoke-VulnAD
  win_shell: |
    Import-Module C:\LabFiles\Vulnerable-AD.ps1
    Invoke-VulnAD -UsersLimit 100 -DomainName ""
  ignore_errors: true

join-domain - This role is used on all Windows 10 clients as well as the generic Windows Server 2019 VM. It will join these machines to the lab domain

---
- name: Join client to Attack Lab Domain ()
  win_domain_membership:
    dns_domain_name: ""
    domain_admin_user: administrator@
    domain_admin_password: P@ssW0rd1!
    state: domain
  register: domain_state

- name: Restart Client
  win_reboot:
    msg: "Client joined to Active Directory - rebooting..."
    pre_reboot_delay: 10
  when: domain_state.reboot_required

- name: Waiting for reconnect after reboot...
  wait_for_connection:
    delay: 60
    timeout: 1800

elasticstack - This role is dedicated to the Elastic Stack VM. It installs and configures Elasticsearch, Logstash, and Kibana, enrolls Kibana into Elasticsearch, and auto-resets the elastic user password

---

- name: Download & Extract Elastic Agent TAR ()

shell:

"cd /root && wget https://artifacts.elastic.co/downloads/beats/elastic-agent/elastic-agent--linux-x86_64.tar.gz && tar -xzvf elastic-agent--linux-x86_64.tar.gz"

- name: Delete Elastic Agent TAR File

file:

path: /root/elastic-agent--linux-x86_64.tar.gz

state: absent

- name: Copy Elastic Repo File

copy:

src: "files/elasticsearch.repo"

dest: /etc/yum.repos.d/elasticsearch.repo

- name: Update /etc/hosts file

lineinfile:

dest: /etc/hosts

regexp: '^127\.0\.0\.1[ \t]+localhost'

line: '127.0.0.1 labelk01 localhost'

- name: Add Elasticsearch key

shell:

"rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch"

- name: Install Elasticsearch

yum:

name: elasticsearch

enablerepo: "elasticsearch"

- name: Install Kibana

yum:

name: kibana

enablerepo: "elasticsearch"

- name: Install Logstash

yum:

name: logstash

enablerepo: "elasticsearch"

- name: Modifying elasticsearch.yml (network.host)

lineinfile:

path: /etc/elasticsearch/elasticsearch.yml

regexp: '^#network.host'

line: 'network.host: '

- name: Modifying elasticsearch.yml (http.port)

lineinfile:

path: /etc/elasticsearch/elasticsearch.yml

regexp: '^#http.port'

line: 'http.port: 9200'

- name: Ignore 'cluster.initial_master_nodes' line

lineinfile:

path: /etc/elasticsearch/elasticsearch.yml

regexp: '^cluster.initial_master_nodes'

line: '#cluster.initial_master_nodes: ["LABELK01"]'

- name: Modifying elasticsearch.yml (discovery.type)

lineinfile:

path: /etc/elasticsearch/elasticsearch.yml

line: 'discovery.type: single-node'

- name: Modifying kibana.yml (server.port)

lineinfile:

path: /etc/kibana/kibana.yml

regexp: '^#server.port'

line: "server.port: 5601"

- name: Modifying kibana.yml (server.host)

lineinfile:

path: /etc/kibana/kibana.yml

regexp: '^#server.host'

line: 'server.host: ""'

- name: Modifying kibana.yml (server.publicBaseUrl)

lineinfile:

path: /etc/kibana/kibana.yml

regexp: '^#server.publicBaseUrl'

line: 'server.publicBaseUrl: "http://:5601"'

- name: Modifying kibana.yml (elasticsearch.hosts)

lineinfile:

path: /etc/kibana/kibana.yml

regexp: '^#elasticsearch.hosts'

line: 'elasticsearch.hosts: ["http://:9200"]'

- name: Copy Logstash configuration file - input-beat.conf

copy:

src: "files/input-beat.conf"

dest: /etc/logstash/conf.d/input-beat.conf

- name: Copy Logstash configuration file - output-elasticsearch.conf

copy:

src: "files/output-elasticsearch.conf"

dest: /etc/logstash/conf.d/output-elasticsearch.conf

- name: Reloading daemons

systemd:

daemon_reload: yes

- name: Enable Elasticsearch on Startup

systemd:

name: elasticsearch

enabled: yes

- name: Enable Logstash on Startup

systemd:

name: logstash

enabled: yes

- name: Enable Kibana on Startup

systemd:

name: kibana

enabled: yes

- name: Start Elasticsearch Service

systemd:

name: elasticsearch

state: started

- name: Start Logstash Service

systemd:

name: logstash

state: started

- name: Start Kibana Service

systemd:

name: kibana

state: started

- name: Generate Kibana Enrollment Token

shell:

"/usr/share/elasticsearch/bin/elasticsearch-create-enrollment-token -s kibana --url \"https://:9200\""

register: enrollment_token

#- debug:

# var: enrollment_token.stdout_lines

- set_fact:

token=

- name: Enroll Kibana in Elasticsearch

shell:

"/usr/share/kibana/bin/kibana-setup --enrollment-token "

# register: enrollment

#- debug:

# var: enrollment.stdout_lines

- name: Reset 'elastic' Password

shell:

"/usr/share/elasticsearch/bin/elasticsearch-reset-password -u elastic -b"

register: elastic_password

- debug:

var: elastic_password.stdout_lines

- name: Restart Kibana Service

systemd:

name: kibana

state: restarted

Variables referenced in these tasks, such as lab_domain_name or elastic_ip, are defined within each role’s vars/ directory. It’s a bit redundant, and I’m sure there’s a better way to define this, along with other variables, but for now it’ll do.

Elastic Stack Playbook

The primary (and only) playbook I currently have set up for the CentOS 8 server installs and performs a preliminary setup of the Elastic Stack (or ELK) - Elasticsearch, Logstash, and Kibana. It’s the last role shown above. I use the Elastic Stack to see what logging looks like from the Blue Team point of view with each attack, tool, command, or payload executed.

Elastic’s Fleet is leveraged in this installation of the Elastic Stack to manage the Elastic Agents that will be installed on the Windows systems in the lab environment. The Ansible roles place the latest version of the Elastic Agent on each Windows virtual machine, but automating the installation isn’t something I’ve tackled yet, so installing and registering the agents with the server is still a manual step. We’ll cover that later in the post-provisioning activities section.

Installing Packer, Terraform, and Ansible

Obviously to make all of this work, you’re going to need the requisite tools installed.

You’re free to install these tools where you see fit, but for the sake of keeping things together, I’m installing Packer, Terraform, and Ansible on an Ubuntu virtual machine. I’ve found it’s especially beneficial to have Packer installed on its own system for one main reason, which I’ll also mention in the CI/CD blog post later:

- Both Windows Subsystem for Linux (WSL) and Windows did not honor the primary network interface of the machine. This seems to be the case especially when you have multiple network interfaces from VMware Workstation or VirtualBox installations, which causes WinRM communications and hosting the CentOS Kickstart template to fail - see an example GitHub issue here

Installing Terraform, Packer, Ansible on Ubuntu

Refer to HashiCorp’s documentation on installing Terraform and Packer on Ubuntu here, and Ansible’s installation documentation here. A consolidated set of commands to install Packer, Terraform, and Ansible on an Ubuntu/Debian-based host is below:

sudo apt-get update && sudo apt-get install -y gnupg software-properties-common gpg

wget -O- https://apt.releases.hashicorp.com/gpg | gpg --dearmor | sudo tee /usr/share/keyrings/hashicorp-archive-keyring.gpg

echo "deb [signed-by=/usr/share/keyrings/hashicorp-archive-keyring.gpg] https://apt.releases.hashicorp.com $(lsb_release -cs) main" | sudo tee /etc/apt/sources.list.d/hashicorp.list

sudo apt update

sudo apt install terraform packer

sudo apt-add-repository ppa:ansible/ansible

sudo apt update

sudo apt install ansible
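Once the packages are installed, a quick check that all three tools are on your PATH can save some head scratching later. This is a minimal sketch; check_tool is a hypothetical helper name, and the version sub-commands are the standard ones each tool ships with.

```shell
#!/usr/bin/env bash
# Sketch: confirm the three tools are on PATH and print the first line of their version output.
check_tool() {
  # $1 = binary name, remaining arguments = its version sub-command or flag
  tool="$1"; shift
  if command -v "$tool" >/dev/null 2>&1; then
    "$tool" "$@" | head -n 1
  else
    echo "$tool: not found on PATH"
    return 1
  fi
}

check_tool terraform version || true
check_tool packer version    || true
check_tool ansible --version || true
```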

Installing Terraform & Packer on Windows

1) Download the Windows Packer and Terraform binaries from the official Hashicorp repositories

2) Create a folder in C:\ called Hashicorp (C:\Hashicorp)

3) Place the downloaded Packer and Terraform binaries into the C:\Hashicorp folder

4) Open “Control Panel” and search for “System” and select “Edit the system environment variables”

5) In the “System Properties” window, under the “Advanced” tab, click the “Environment Variables…” button

6) Under the “System variables” section, scroll down until you find Path and click “Edit…“

7) Click “New” and add the path you created earlier - C:\Hashicorp and click “OK”

8) Click “OK” on all remaining windows

9) Open the Command Prompt and type in packer.exe and terraform.exe and ensure both execute

Installing Ansible on Windows

You will need to enable Windows Subsystem for Linux (WSL) and install Ansible within that environment. Here is a good tutorial (Method 3) on how to enable WSL on Windows 10/Windows 11 and install Ansible.

Putting it All Together - Deploying the Lab

Below are examples of going through each stage manually. The following assumes you are using these tools on a Linux-based system, but they are used the same way on Windows; only the directory paths differ!

This is a high-level walkthrough of the stages to deploy and provision the lab environment. The Wiki on the AttackLab-Lite GitHub goes into more detail, so feel free to refer to it if the following sections aren’t clear enough.

Don’t forget to upload your Windows VMware Tools, CentOS 8, Windows 10, and Windows Server 2019 ISO files to your ESXi datastore!

Packer - Building VM Images

Before jumping into building your base images with Packer, you’ll need to modify the variable files for each Packer template, which can be found in the following paths:

- packer/Win10/win10.pkrvars.hcl

- packer/Win2019/win2019.pkrvars.hcl

- packer/CentOS8/centos8-stream.pkrvars.hcl

Adjust virtual machine settings such as vRAM, vCPU, network interfaces, and especially your datastore name/paths, and ISO filenames.

#Virtual Machine Settings

vm_name = "Win10Pro-Template"

vm_guestos = "windows9_64Guest"

vm_cpus = "1"

vm_cpucores = "4"

vm_ram = "4096"

vm_disk_controller = ["lsilogic-sas"]

vm_disk_size = "61440"

vm_network = "VM Network"

vm_nic = "e1000e"

vm_notes = "AttackLab-Lite - Windows 10 Pro Template - "

vm_cdrom_type = "sata"

#WinRM Communicator Authentication; based on Autounattend.xml file

builder_username = "Administrator"

builder_password = "P@ssW0rd1!"

#ISO URL (https://gist.github.com/mndambuki/35172b6485e40a42eea44cb2bd89a214) - Windows 10 Enterprise 1909

#os_iso_url = "https://software-download.microsoft.com/download/pr/18362.30.190401-1528.19h1_release_svc_refresh_CLIENTENTERPRISEEVAL_OEMRET_x64FRE_en-us.iso"

#os_iso_checksum = "ab4862ba7d1644c27f27516d24cb21e6b39234eb3301e5f1fb365a78b22f79b3"

#Path to Windows 10 ISO on VM Host

os_iso_path = "[Datastore] ISO/Win10_21H1_English_x64.iso"

os_iso_checksum = "6911e839448fa999b07c321fc70e7408fe122214f5c4e80a9ccc64d22d0d85ea"

#Enable SSH on ESXi host and use WinSCP to browse to "/vmimages/tools-isoimages" and download "windows.iso" from there, then upload to datastore

vmtools_iso_path = "[Datastore] vmtools/windows.iso"

Again, it’s important at this stage that the OS and VMware Tools ISO files are uploaded to your ESXi datastore and that you’ve reflected those changes in these variable files.

The vSphere variables file, vsphere.pkrvars.hcl, is located in each operating system’s Packer directory and also needs to be modified. Specifically, you’ll need to populate your vSphere instance IP/FQDN, username, password, Datacenter, Cluster, and Datastore information.

#vSphere Settings

vsphere_ip = ""

vsphere_username = ""

vsphere_password = ""

vsphere_dc = ""

vsphere_datastore = ""

vsphere_cluster = ""

vsphere_folder = ""
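If you would rather not keep the vSphere password in the file, Packer can also read input variables from the environment. Below is a minimal sketch, assuming the templates declare variables named vsphere_username and vsphere_password as the keys above suggest (not confirmed against the templates themselves). The account name and fallback password are placeholders. Values passed with -var-file take precedence over the environment, so the matching lines would need to be removed from the variables file for this to take effect.

```shell
# Packer maps an environment variable named PKR_VAR_<name> onto the input variable <name>.
export PKR_VAR_vsphere_username="administrator@vsphere.local"     # placeholder account
export PKR_VAR_vsphere_password="${VSPHERE_PASSWORD:-change-me}"  # placeholder fallback

# Show what Packer will pick up, masking the password value.
env | grep '^PKR_VAR_' | sed 's/\(PKR_VAR_vsphere_password=\).*/\1********/'
```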

Below are the commands to build each image:

cd packer/Win2019

packer build -force -var-file win2019.pkrvars.hcl -var-file vsphere.pkrvars.hcl win2019.pkr.hcl

cd packer/Win10

packer build -force -var-file win10.pkrvars.hcl -var-file vsphere.pkrvars.hcl win10.pkr.hcl

cd packer/CentOS8

packer build -force -var-file centos8-stream.pkrvars.hcl -var-file vsphere.pkrvars.hcl centos8-stream.pkr.hcl
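Since each build takes a while, it can be worth validating a template and its variable files before kicking off the build. This is a minimal sketch, assuming the directory and file names follow the .pkrvars.hcl naming from the variable file list earlier in this section. validate_template is a hypothetical helper, and it only prints the commands unless DRY_RUN=0 is set.

```shell
#!/usr/bin/env bash
# Sketch: validate each Packer template with its variable files before building.
DRY_RUN="${DRY_RUN:-1}"   # 1 = print commands only (default); 0 = run them

validate_template() {
  # $1 = directory under packer/, $2 = OS variables file, $3 = template file
  cmd="packer validate -var-file $2 -var-file vsphere.pkrvars.hcl $3"
  if [ "$DRY_RUN" = "1" ]; then
    echo "(cd packer/$1 && $cmd)"
  else
    (cd "packer/$1" && $cmd)
  fi
}

validate_template Win2019 win2019.pkrvars.hcl        win2019.pkr.hcl
validate_template Win10   win10.pkrvars.hcl          win10.pkr.hcl
validate_template CentOS8 centos8-stream.pkrvars.hcl centos8-stream.pkr.hcl
```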

Depending on your available resources, it should take Packer about 10-15 minutes to build each of the VM templates. After Packer has completed creating VM templates, it’s time to move on to deploying the infrastructure from these templates using Terraform.

Terraform - Deploying Infrastructure

Before deploying the infrastructure with Terraform, you’ll need to edit a few files, beginning with the variables.tf file.

In this file, you will need to reflect changes for your ESXi/vSphere environment such as your:

- ESXi host IP or FQDN

- Datacenter

- Target Datastore

- Target Network (i.e.: vSwitch)

- Cluster Name

variable "vsphere_username" {

description = "vSphere user account"

type = string

}

variable "vsphere_password" {

description = "Password for vSphere user account"

type = string

}

variable "vsphere_server" {

description = "IP address or FQDN of vSphere server"

type = string

default = "x.x.x.x"

}

variable "datacenter_name" {

description = "Datacenter Name to deploy VM resources to within vSphere"

type = string

default = "Your-Datacenter"

}

variable "datastore_name" {

description = "Name of Datastore where VM resources will be saved to"

type = string

default = "Your-Datastore"

}

variable "network_name" {

description = "Network that VM resources will be connected to (i.e.: vSwitch)"

type = string

default = "Your-VM-Network"

}

variable "cluster_name" {

description = "Name of Cluster in vSphere where VM resources will be deployed to"

type = string

default = "Your-Cluster-Name"

}

variable "windows-2019" {

description = "Predefined values for deploying multiple Windows Server 2019 VM's with different requirements - see terraform.tfvars"

type = list

default = [ ]

}

variable "windows-10" {

description = "Predefined values for deploying multiple Windows 10 VM's with different requirements - see terraform.tfvars"

type = list

default = [ ]

}

variable "centos8" {

description = "Predefined values for deploying multiple CentOS 8 VM's with different requirements - see terraform.tfvars"

type = list

default = [ ]

}

Next, you may also want to make some changes to some parameters in the terraform.tfvars file to reflect your network schema, along with other variables such as vRAM, vCPU, and so on.

- vm_name - This will become the effective hostname of the VM which Terraform will provision during deployment

- folder - Folder within vSphere where the VM resources will be organized into; make sure you’ve created this folder manually within vSphere first

- ipv4_address - Static IP address for the virtual machine which Terraform will provision during deployment

- ipv4_gateway - Default gateway for your network which Terraform will provision during deployment

- dns_server_list - Primary and secondary DNS IP addresses which Terraform will provision during deployment; for the Domain Controller, keep 127.0.0.1 as the primary DNS IP

Modifying domain and dns_suffix is optional - I’d recommend leaving the domain names in place for Ansible’s sake later on.

# Windows Server 2019 (DC, General Purpose)

windows-2019 = [

{

vm_name = "LABDC01"

folder = "AD Attack Lab"

vcpu = "2"

memory = "4096"

admin_password = "P@ssW0rd1!"

ipv4_address = "192.168.50.200"

ipv4_gateway = "192.168.50.1"

dns_server_list = ["127.0.0.1","192.168.50.1"]

},

{

vm_name = "LABSVR01"

folder = "AD Attack Lab"

vcpu = "2"

memory = "4096"

admin_password = "P@ssW0rd1!"

ipv4_address = "192.168.50.100"

ipv4_gateway = "192.168.50.1"

dns_server_list = ["192.168.50.200","192.168.50.1"]

}

]

# Windows 10 Endpoints

windows-10 = [

{

vm_name = "WIN10LAB-01"

folder = "AD Attack Lab"

vcpu = "4"

memory = "4096"

admin_password = "P@ssW0rd1!"

ipv4_address = "192.168.50.50"

ipv4_gateway = "192.168.50.1"

dns_server_list = ["192.168.50.200","192.168.50.1"]

},

{

vm_name = "WIN10LAB-02"

folder = "AD Attack Lab"

vcpu = "4"

memory = "4096"

admin_password = "P@ssW0rd1!"

ipv4_address = "192.168.50.51"

ipv4_gateway = "192.168.50.1"

dns_server_list = ["192.168.50.200","192.168.50.1"]

}

]

# CentOS 8 Server (ELK)

centos8 = [

{

vm_name = "LABELK01"

folder = "AD Attack Lab"

vcpu = "4"

memory = "8000"

ipv4_address = "192.168.50.10"

ipv4_gateway = "192.168.50.1"

dns_server_list = ["192.168.50.200","192.168.50.1"]

dns_suffix_list = ["lab.local"]

domain = "lab.local"

}

]

You can pass your vSphere username and password into the command below, set them as environment variables, or put them in the variables.tf file directly. I’m not going for security and secrets-management-best-practices here since it’s just a lab environment.

cd terraform

terraform init

terraform apply --var="vsphere_username=$VSPHERE_USERNAME" --var="vsphere_password=$VSPHERE_PASSWORD" --auto-approve
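For the environment variable route, Terraform picks up any variable exported with a TF_VAR_ prefix, so the credentials don’t have to appear on the command line. This is a minimal sketch, assuming the variable names from variables.tf above. The account name and fallback password are placeholders, and the apply is skipped when Terraform or the terraform/ directory isn’t present.

```shell
# Terraform maps TF_VAR_<name> onto the input variable <name> declared in variables.tf.
export TF_VAR_vsphere_username="administrator@vsphere.local"     # placeholder account
export TF_VAR_vsphere_password="${VSPHERE_PASSWORD:-change-me}"  # placeholder fallback

# With both exported, the apply no longer needs the --var flags.
if command -v terraform >/dev/null 2>&1 && [ -f terraform/variables.tf ]; then
  (cd terraform && terraform init && terraform apply)
else
  echo "terraform or terraform/variables.tf not found; skipping apply"
fi
```

Leaving off --auto-approve here means Terraform shows the plan and waits for confirmation, which is a reasonable default while you’re still adjusting the variables.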

Again, depending on resources, deployment of the VM templates as outlined above (5 VM resources) takes about 10 minutes.

Ansible - Provisioning Infrastructure

Before getting started with Ansible, you’ll need to make modifications to the inventory file - specifically, the IP addresses.

Modify the inventory.yml file and adjust the IP addresses in accordance with your network schema and as they were also defined in the terraform.tfvars file from the previous section.
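Keeping the two files in sync by hand is easy to get wrong, so a small check can help. This is a minimal sketch that lists any ipv4_address from terraform.tfvars that has no matching host entry in inventory.yml. The function names are hypothetical, and the terraform/ and ansible/ paths are assumed from the commands and layout shown in this post.

```shell
#!/usr/bin/env bash
# Sketch: report ipv4_address values from terraform.tfvars that have no host entry in inventory.yml.

tfvars_ips() {
  # Matches lines such as: ipv4_address = "192.168.50.200"
  grep -E '^[[:space:]]*ipv4_address[[:space:]]*=' "$1" \
    | grep -oE '([0-9]{1,3}\.){3}[0-9]{1,3}' | sort -u
}

inventory_ips() {
  # Matches host keys such as: 192.168.50.200:
  grep -oE '^[[:space:]]*([0-9]{1,3}\.){3}[0-9]{1,3}:' "$1" | tr -d ' \t:' | sort -u
}

missing_from_inventory() {
  # $1 = terraform.tfvars path, $2 = inventory.yml path
  for ip in $(tfvars_ips "$1"); do
    inventory_ips "$2" | grep -qxF "$ip" || echo "$ip"
  done
}

# Assumed repository layout: terraform/terraform.tfvars and ansible/inventory.yml.
if [ -f terraform/terraform.tfvars ] && [ -f ansible/inventory.yml ]; then
  missing_from_inventory terraform/terraform.tfvars ansible/inventory.yml
fi
```

No output means every address Terraform assigns also appears as a host in the inventory.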

---
lab_dc:
  hosts:
    #labdc01.lab.local
    192.168.50.200:
  vars:
    ansible_user: Administrator
    ansible_password: P@ssW0rd1!
    ansible_connection: winrm
    ansible_winrm_server_cert_validation: ignore

lab_server:
  hosts:
    #labsvr01.lab.local
    192.168.50.100:
  vars:
    ansible_user: Administrator
    ansible_password: P@ssW0rd1!
    ansible_connection: winrm
    ansible_winrm_server_cert_validation: ignore

lab_clients:
  hosts:
    #win10-01.lab.local
    192.168.50.50:
    #win10-02.lab.local
    192.168.50.51:
  vars:
    ansible_user: Administrator
    ansible_password: P@ssW0rd1!
    ansible_connection: winrm
    ansible_winrm_server_cert_validation: ignore

lab_elastic:
  hosts:
    #labelk01.lab.local
    192.168.50.10:
  vars:
    ansible_user: root
    ansible_password: P@ssW0rd1!
    ansible_connection: ssh
    ansible_ssh_common_args: '-o StrictHostKeyChecking=no'

You will also likely need to modify the elastic_ip and the elastic_ip_address variables to reflect your network schema, too. Remember, this should align with what was provided to Terraform earlier in the terraform.tfvars file. The variable files can be found in the following paths:

- ansible/roles/active-directory/vars/main.yml

- ansible/roles/elasticstack/vars/main.yml

lab_domain_name: lab.local

lab_netbios_name: LAB

lab_domain_recovery_password: P@ssW0rd1!

elastic_ip_address: 192.168.50.10

elastic_ip: 192.168.50.10

To provision each aspect of the infrastructure, we’ll use the command referenced below to run the Ansible playbook to provision our Windows 10, Windows Server 2019, and CentOS 8 virtual machines.

cd ansible/

ansible-playbook -i inventory.yml attacklab.yml
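Before running the full playbook, it can help to confirm Ansible can actually reach every host. This is a minimal sketch using the group names from inventory.yml above. The run helper is hypothetical, and it only prints the commands unless DRY_RUN=0 is set. As far as I know, WinRM connections need the pywinrm Python package on the Ansible host and password-based SSH needs sshpass, so treat those as prerequisites to verify.

```shell
#!/usr/bin/env bash
# Sketch: confirm Ansible can reach every lab host before running the playbook.
DRY_RUN="${DRY_RUN:-1}"   # 1 = print commands only (default); 0 = run them

# Hypothetical helper: print a command in dry-run mode, otherwise execute it.
run() { if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi; }

# The Windows groups answer over WinRM via the win_ping module.
run ansible -i inventory.yml 'lab_dc:lab_server:lab_clients' -m win_ping

# The Elastic Stack host answers over SSH via the regular ping module.
run ansible -i inventory.yml lab_elastic -m ping
```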

If for some reason you modify or need to re-run Ansible against a specific host (or set of hosts), you can rely on the tags that have been added to the attacklab.yml playbook to narrow the scope. The following tags are available:

- dc - Domain Controller Roles

- winserver - Generic Windows Server Roles

- clients - Windows 10 Client Roles

- elk - Elastic Stack Server Roles

To run any given set of roles against a specific tag, use the following command as an example:

cd ansible/

ansible-playbook -i inventory.yml attacklab.yml --tags "elk"
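Tags can also be combined or narrowed further with standard ansible-playbook options. This is a minimal sketch; play is a hypothetical helper that only prints the command it would run, so drop the echo to execute it. The host address in the last example comes from the inventory shown earlier.

```shell
#!/usr/bin/env bash
# Sketch: build tag-scoped ansible-playbook commands (printed, not executed).
play() {
  # $1 = comma-separated tags, remaining arguments = extra ansible-playbook options
  tags="$1"; shift
  echo ansible-playbook -i inventory.yml attacklab.yml --tags "$tags" "$@"
}

# Preview which hosts a combination of tags would touch without running any tasks.
play "dc,winserver" --list-hosts

# Re-run the client roles against a single Windows 10 machine.
play "clients" --limit 192.168.50.50
```

Running ansible-playbook with --list-tags against attacklab.yml shows every tag the playbook defines, which is useful if the list above drifts from the repository.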

When Ansible begins to run the Elastic Stack tasks, pay close attention to the final output as it provides the password for the elastic user that you’ll use to log into Kibana with later. The tasks associated with the Elastic Stack run last, so the following output example should be one of the final messages provided by Ansible.

TASK [elasticstack : Reset 'elastic' Password] ****************************************************************************************************************************

changed: [192.168.50.10]

TASK [elasticstack : debug] ***********************************************************************************************************************************************

ok: [192.168.50.10] => {

"elastic_password.stdout_lines": [

"Password for the [elastic] user successfully reset.",

"New value: *NTsfc0IArTXRuvteWFJ"

]

}
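To confirm the new password works before heading to Kibana, you can query Elasticsearch directly. This is a minimal sketch, assuming Elasticsearch is listening over HTTPS on port 9200 with a self-signed certificate (which the enrollment token step above suggests) and that LABELK01 kept the 192.168.50.10 address. health_url is a hypothetical helper, and nothing is sent unless ELASTIC_PASSWORD is set.

```shell
#!/usr/bin/env bash
# Sketch: confirm the reset password works against the Elasticsearch HTTP API.
ELASTIC_HOST="${ELASTIC_HOST:-192.168.50.10}"   # LABELK01 address from terraform.tfvars
ELASTIC_PASSWORD="${ELASTIC_PASSWORD:-}"        # the "New value" from the Ansible output

# Hypothetical helper: build the cluster health URL for host $1.
health_url() {
  printf 'https://%s:9200/_cluster/health?pretty' "$1"
}

if [ -n "$ELASTIC_PASSWORD" ] && command -v curl >/dev/null 2>&1; then
  # -k skips certificate verification because the lab certificate is assumed to be self-signed.
  curl -sk -u "elastic:${ELASTIC_PASSWORD}" "$(health_url "$ELASTIC_HOST")"
else
  echo "Set ELASTIC_PASSWORD, then query: $(health_url "$ELASTIC_HOST")"
fi
```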

Manual Post-Provisioning Activities

There are some manual activities that need to be done post-deployment and post-provisioning. Specifically, installing and configuring Fleet Server on the Elastic Stack and enrolling the Elastic Agents on the Windows 10 and Windows Server 2019 virtual machines into the Elastic Stack. This is so we can ensure all the logs we want are forwarded from our lab machines to the Elastic Stack for later viewing… and dashboards!

Consult the AttackLab-Lite Wiki for detailed steps on how to setup and configure the initial Fleet Server and enroll Windows Elastic Agents into the Fleet Server/Elastic Stack for monitoring.

Retrospective

There are a lot of things I want to improve upon and expand upon within the AttackLab-Lite infrastructure to make it more robust. This post focused more on the DevOps side of getting the lab environment up and running using various tools. There’s more I’d like to incorporate and do to make this more misconfigured and full of real-world telemetry and behaviors.

- I could probably improve on the Ansible layout, but it’s functional for now!

- Create a custom script to populate and misconfigure Active Directory to my needs; Vulnerable-AD and BadBlood are great, but the more I can customize, the better - like the PowerShell script I put together to allow NULL LDAP binds

- Develop simulation scripts or agents to emulate specific end user activity; I know some of these exist out there, but some haven’t been updated in a while. Besides, it’s something to work towards!

- Incorporate the free Splunk Enterprise trial - automating installation/configuration of Splunk Universal Forwarders (UFs) is much more promising

- Incorporate pfSense as the central router/firewall for the environment - do some Network Security Monitoring (NSM); looking at you, Zeek!

- How do I get Wireshark and WinPcap to install nicely with Chocolatey!?