Journey into Building a K3s Cluster... so far!

Introduction

I decided I wanted to learn the daunting world of Kubernetes, K3s, Pods, and all the related things. Don’t get me wrong, I’m still learning. Out of everything I have tackled in my career, from being a Sysadmin to a Red Teamer and everything in between, I have never felt more like the following image than while toying around with K3s.

The amount of respect I have for DevOps folks - unmatchable.

Actual picture of me playing with anything Kubernetes

I figured I’d recount my experience (so far) with getting to learn more about Kubernetes, K3s, Rancher, Longhorn, Manifests, Deployments, Pods, Services, and other related buzzwords.

The cool thing is, the post you’re reading is running on a Ghost blog deployment on a K3s cluster in my office, tunneled out to the Internet via Cloudflare. Neat.

What is K3s and why a Cluster?

K3s, or lightweight Kubernetes, is a fully certified Kubernetes (K8s) distribution. Its footprint is much smaller than that of a traditional Kubernetes distribution, and it targets more specific use cases such as IoT and embedded systems. The original developers behind K3s, Rancher, apparently removed some 3 million lines of code from K8s to create a more streamlined and efficient Kubernetes distribution that relies on only the bare necessities to run.

So, why a cluster of K3s nodes? In the context of a home lab, it’s closer to real-world implementations of Kubernetes and provides, in my opinion, a more holistic learning experience. From the perspective of a nerd: because clusters, and more hardware in the server rack. I could easily have deployed a few virtual machines in my ESXi environment and achieved the same thing, but where’s the fun in that? This also gives me an opportunity to learn something new outside the realm of security, although this will likely head down that path regardless.

K3s Cluster + Raspberry Pis = Nope!

It doesn’t really equal “nope”, because it actually would have been my preferred option for this project. If you’re a nerd who likes to keep a stack of Raspberry Pis lying around for different projects, then you’re no stranger to the shortage of them and, like GPUs in 2020 through 2021, the price gouging from folks reselling them. If you’re from the future and Raspberry Pis are affordable again, just know that when this was written they were nearly impossible to get your hands on unless you wanted to pay way over MSRP on eBay.

I intended to start my journey into K3s by purchasing a handful of Pi 4s and clustering them together. Given the market, I found an alternative: not quite as small as a Raspberry Pi, but still on the smaller side and not terrible on power consumption either.

Enter five (5) Dell Optiplex 7050 Mini Form Factor (MFF) PCs from eBay!

- Intel Core i5 7500T

- 8GB RAM (2x 4GB)

- 120GB SATA SSD (post-purchase)

At a total cost of about $500.00 for all five MFFs, it cost almost the same as buying five Raspberry Pi 4s with 4GB of RAM each in the current market. The upside is that I’m getting more compute for the dollar, and this hardware can be reused for future projects with higher compute demands if I ever decide to disassemble the K3s cluster.

The only caveat was that the MFFs came without storage. Surprisingly, these 7050s accept both SATA and M.2 storage. Off to Amazon I went, and I purchased five 120GB PNY SSDs at a whopping $14.99 per drive.

This might be an overkill approach to building a K3s cluster, considering what I’m hosting in the cluster at the moment, but if I decide Kubernetes is not for me one day I can always repurpose the hardware for another project.

K3s Cluster Architecture

My K3s cluster consists of the following services and components:

Monitoring with Grafana & Prometheus

Monitors the cluster and its various components, such as the nodes themselves, whole deployments, or individual Pods.

Longhorn

Manages storage (PVs/PVCs) and allows for backing up and restoring data to/from AWS S3 or NFS storage.

MetalLB

Assigns network addresses to services so they are reachable directly on the network; those addresses can then be port-forwarded if you’re a self-hosting fan. I prefer Cloudflare Tunnels.

Rancher

The orchestrator behind the entire cluster deployment and the management of resources within the cluster. It currently runs as a Docker container on a separate virtual machine within my ESXi environment.
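For anyone curious what the MetalLB piece looks like, here is a minimal sketch of a Layer 2 setup, assuming MetalLB v0.13 or newer (which is configured through CRDs rather than a ConfigMap) installed in the `metallb-system` namespace. The names `homelab-pool` and `homelab-l2` and the address range are hypothetical; pick a range on your LAN that your DHCP server won’t hand out.

```yaml
# Pool of LAN addresses MetalLB may assign to LoadBalancer Services.
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: homelab-pool                # hypothetical pool name
  namespace: metallb-system
spec:
  addresses:
    - 192.168.1.240-192.168.1.250   # hypothetical range, reserved outside DHCP
---
# Announce addresses from the pool above on the local network (Layer 2 mode).
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: homelab-l2                  # hypothetical name
  namespace: metallb-system
spec:
  ipAddressPools:
    - homelab-pool
```

With this in place, a Service of `type: LoadBalancer` should be assigned an address from the pool. Note that K3s ships its own ServiceLB load balancer, which is generally disabled (for example with the `--disable servicelb` server option) when MetalLB takes over.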

My cluster consists of 2 master nodes (control plane) and 3 worker nodes, all managed via Rancher, which provides a nice WebUI for navigating your cluster, namespaces, deployments, and so on. Rancher also guides you through setting up a cluster by providing a one-liner bash command to install and enroll nodes, which makes getting a cluster up and running really simple.

```
itadmin@kube01:~$ sudo kubectl get nodes
NAME     STATUS   ROLES                       AGE    VERSION
kube01   Ready    control-plane,etcd,master   124d   v1.24.4+k3s1
kube02   Ready    control-plane,etcd,master   124d   v1.24.4+k3s1
kube03   Ready    worker                      124d   v1.24.4+k3s1
kube04   Ready    worker                      124d   v1.24.4+k3s1
kube05   Ready    worker                      124d   v1.24.4+k3s1
itadmin@kube01:~$
```

Aww, yeah! Dashboards with Grafana!

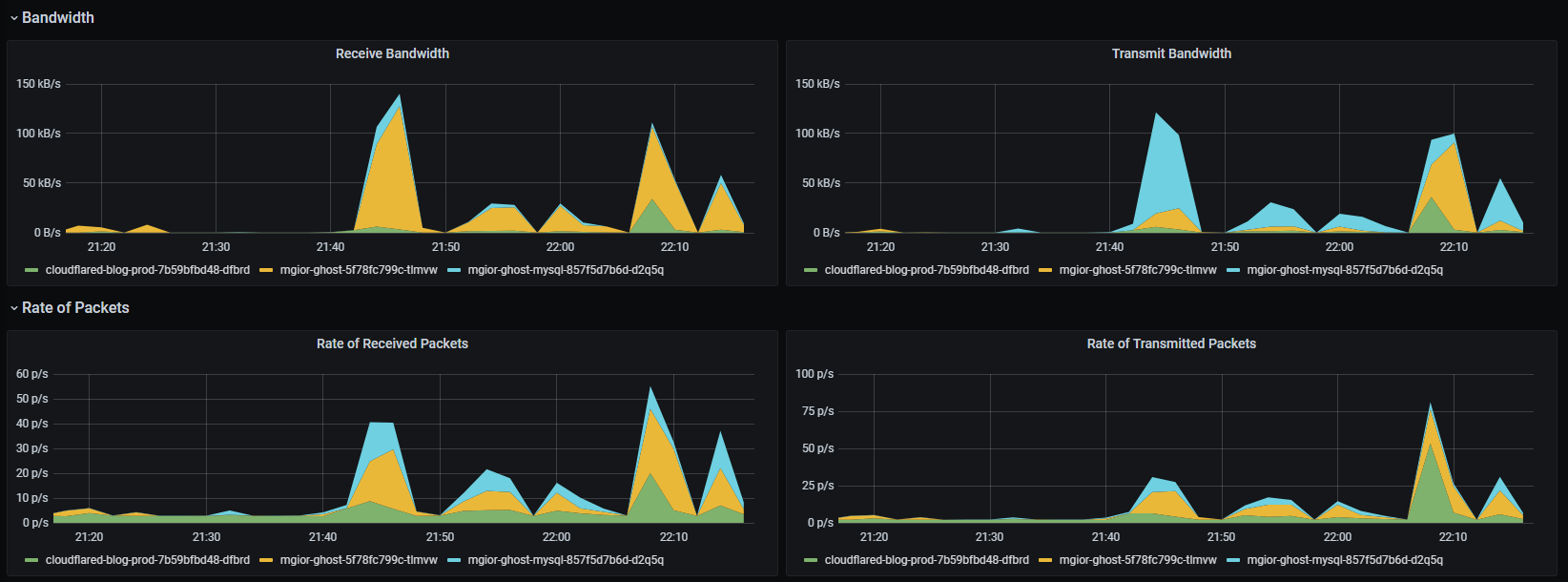

Within Rancher, you can deploy Apps via Helm Charts. One of those happens to be a nice Grafana + Prometheus monitoring stack! With Grafana, I can monitor different aspects of the cluster from nodes, to deployments, to pods.

Installing and running Grafana, Alertmanager, and Prometheus is really simple with Rancher’s built-in “Apps”, which are packaged as Helm charts and effectively turn the stack into a single-click install. Along with that, you can use Helm charts to install and deploy other components like MetalLB, WordPress, nginx, and more.
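Rancher’s Apps page is the point-and-click route. As an alternative to it (not in addition to it, since two Prometheus stacks side by side would likely conflict), K3s also embeds a Helm controller that installs any chart declared in a `HelmChart` manifest, which is handy if you’d rather keep installs in Git. This sketch uses the upstream `kube-prometheus-stack` chart from the prometheus-community repository, not the Rancher-packaged chart; the `monitoring` namespace and the password placeholder are assumptions.

```yaml
# Namespace the chart will be installed into (hypothetical name).
apiVersion: v1
kind: Namespace
metadata:
  name: monitoring
---
# Picked up by the Helm controller embedded in K3s, which performs the
# equivalent of a "helm install" for the chart below.
apiVersion: helm.cattle.io/v1
kind: HelmChart
metadata:
  name: kube-prometheus-stack
  namespace: kube-system            # conventional home for HelmChart objects
spec:
  repo: https://prometheus-community.github.io/helm-charts
  chart: kube-prometheus-stack
  targetNamespace: monitoring       # where the chart's resources are created
  valuesContent: |-
    grafana:
      adminPassword: <CHANGE_ME>    # placeholder; prefer a Secret in practice
```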

With the help of Alertmanager and Prometheus, I can monitor the entire K3s cluster for alert conditions, such as high CPU usage or crash loops from a failing deployment I forgot about - whoops.
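To give a feel for how such alarms are defined, here is a sketch of a `PrometheusRule` covering the two examples above. It assumes the Prometheus Operator CRDs that come with the monitoring stack, plus the `kube-state-metrics` and `node-exporter` metrics it normally scrapes. The rule names, thresholds, and the `cattle-monitoring-system` namespace (where Rancher’s monitoring app installs) are assumptions; depending on how the Prometheus rule selector is configured, you may also need a matching label before the rule is picked up.

```yaml
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  name: homelab-alerts                     # hypothetical name
  namespace: cattle-monitoring-system      # assumed monitoring namespace
spec:
  groups:
    - name: homelab.rules
      rules:
        # A container restarting more than 3 times in 15 minutes usually
        # means a crash loop.
        - alert: PodRestartingTooOften
          expr: increase(kube_pod_container_status_restarts_total[15m]) > 3
          for: 5m
          labels:
            severity: warning
          annotations:
            summary: "{{ $labels.namespace }}/{{ $labels.pod }} is restarting repeatedly"
        # Busy CPU % per node = 100 - average idle %; alert above 90% for 10 minutes.
        - alert: NodeHighCpu
          expr: 100 - (avg by (instance) (rate(node_cpu_seconds_total{mode="idle"}[5m])) * 100) > 90
          for: 10m
          labels:
            severity: warning
          annotations:
            summary: "High CPU usage on {{ $labels.instance }}"
```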

In the spirit of logging, one of the things I’m trying to work towards is obtaining visibility into logs from Pods, such as the Ghost Blog Pod. What I would like to pull from this is essentially the HTTP request logs. I know there are ways to accomplish this with Grafana and some additional add-ons, but I haven’t found a clear tutorial or primer that can help me get that up and running. It’s something I’ll likely test in my virtualized “development” cluster so I don’t break anything in my production cluster.

Storage with Longhorn

I’m still new to how storage works in Kubernetes, but I’m leveraging Longhorn via Rancher to handle the Persistent Volumes (PVs) and Persistent Volume Claims (PVCs) for my deployments. Longhorn provides a nice WebUI for backing up, restoring, and attaching PVs, and so on.
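From a deployment’s point of view, using Longhorn mostly comes down to requesting its StorageClass, which a standard Longhorn install names `longhorn`. A minimal sketch of such a claim; the claim name `ghost-content` and the 5Gi size are hypothetical, and the `ghost-blog` namespace matches the cloudflared manifest later in this post.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ghost-content            # hypothetical claim name
  namespace: ghost-blog
spec:
  accessModes:
    - ReadWriteOnce              # mounted read-write by a single node
  storageClassName: longhorn     # Longhorn's default StorageClass
  resources:
    requests:
      storage: 5Gi               # hypothetical size
```

Longhorn then provisions the volume and its PV automatically, and the Deployment mounts the claim by name.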

Additionally, I’m using Longhorn’s built-in backup mechanism to back up PVs to a private S3 bucket in AWS. This way, I can restore from the S3 bucket in the event my K3s cluster or Rancher VM dies. This would help bring back deployments such as this blog, but it would not, for example, restore the control plane. This may not be the correct disaster recovery or backup strategy, but I’m still getting the hang of things - more to learn.
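For reference, the S3 side of a setup like this boils down to a credentials Secret in Longhorn’s namespace, two Longhorn settings, and optionally a recurring job. A minimal sketch, assuming the default `longhorn-system` namespace; the secret name, bucket, region, and schedule are hypothetical. The `RecurringJob` uses the `longhorn.io/v1beta2` API served by recent Longhorn releases, so check which version your install provides.

```yaml
# Credentials Longhorn uses to reach the S3 bucket (placeholders).
apiVersion: v1
kind: Secret
metadata:
  name: aws-s3-backup-secret      # hypothetical name
  namespace: longhorn-system      # Longhorn's default namespace
type: Opaque
stringData:
  AWS_ACCESS_KEY_ID: <ACCESS_KEY_ID>
  AWS_SECRET_ACCESS_KEY: <SECRET_ACCESS_KEY>
# Then, in Longhorn's settings, point the backup target at the bucket:
#   Backup Target:                   s3://<BUCKET_NAME>@<REGION>/
#   Backup Target Credential Secret: aws-s3-backup-secret
---
# Recurring backup job for volumes in the "default" recurring-job group.
apiVersion: longhorn.io/v1beta2
kind: RecurringJob
metadata:
  name: nightly-backup            # hypothetical name
  namespace: longhorn-system
spec:
  name: nightly-backup
  task: backup                    # "backup" pushes to the backup target; "snapshot" stays local
  cron: "0 3 * * *"               # every day at 03:00 (hypothetical schedule)
  groups:
    - default
  retain: 7                       # keep the 7 most recent backups per volume
  concurrency: 2                  # back up at most 2 volumes at a time
```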

So far, in a virtualized development cluster, I’ve destroyed and recreated this blog and restored it from backup several times to get my own DR plan in order. It works, but I still need to explore tools outside of Longhorn to actually back up the components of my cluster.

Running Ghost Blog on K3s

I’ve referenced several tutorials I came across on Google and cobbled together what has worked for me and even refined it to work on the latest version of Ghost, which requires MySQL 8.

I published the manifest on GitHub for a basic Ghost + MySQL deployment so you can reference it here if you’re interested in hosting a Ghost blog yourself. There are some required changes noted in comments in the file, but also feel free to adjust values such as the namespace and labels - like I know what I’m talking about.

```bash
kubectl apply -f ghost-blog-k3s-template.yml
```

My Ghost deployment is built on the aforementioned manifest, and it also uses Cloudflare Tunnels, through cloudflared, to expose the blog to the Internet.
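The `<SERVICE_NAME>` in the tunnel config further down refers to an ordinary ClusterIP Service in front of the Ghost Pods. A sketch of one, assuming the Ghost Pods carry a hypothetical `app: ghost` label and listen on Ghost’s default port, 2368; the template on GitHub may name things differently.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: ghost                # hypothetical; becomes <SERVICE_NAME> in the tunnel config
  namespace: ghost-blog
spec:
  type: ClusterIP            # reachable only inside the cluster, which is all cloudflared needs
  selector:
    app: ghost               # hypothetical label on the Ghost Pods
  ports:
    - name: http
      port: 80               # port the tunnel's ingress rule targets
      targetPort: 2368       # Ghost's default listening port
```

With these names, the tunnel’s ingress rule would point at `http://ghost.ghost-blog.svc.cluster.local:80`.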

Exposing Ghost via Cloudflare Tunnels

This assumes your DNS is managed by Cloudflare. If not, take a look and sign up for a free account!

- First, install cloudflared. In my case, I installed it on a Linux box that has my kubeconfig file, so I can set up the secret on the cluster later on.

- Next, authenticate cloudflared with your Cloudflare account by logging in.

```bash
cloudflared tunnel login
```

- After logging in, you can now create your first tunnel.

```bash
cloudflared tunnel create TUNNEL_NAME
```

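- Next, create a CNAME record that points a hostname at the tunnel, for example www.yourdomain.com. Repeat this for any other hostname you plan to route through the tunnel (such as the bare domain).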

```bash
cloudflared tunnel route dns TUNNEL_NAME www.yourdomain.com
```

Now, for cloudflared to work within your deployment, you’ll need to create a secret in your cluster that the cloudflared Pod can read to authenticate your tunnel. The secret must live in the same namespace as the cloudflared Deployment (ghost-blog in the manifest below). Since you should have kubectl installed on the same machine (handy), you can create the secret right from there!

```bash
kubectl create secret generic TUNNEL_SECRET_NAME --namespace ghost-blog --from-file=credentials.json=/home/USER/.cloudflared/TUNNEL_ID.json
```

The tunnel ID is printed when you run the cloudflared tunnel create command from earlier, so you can use that as a reference; cloudflared tunnel list also shows it.

Now you can set up your cloudflared manifest to point your tunnel at the web service in your cluster. Obviously, change the namespace, domain name, secret name, etc.

```yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: cloudflared-blog-tunnel
  namespace: ghost-blog
  labels:
    app: ghost-cloudflare-tunnel
spec:
  selector:
    matchLabels:
      app: ghost-cloudflare-tunnel
  replicas: 1
  template:
    metadata:
      labels:
        app: ghost-cloudflare-tunnel
    spec:
      containers:
        - name: ghost-cloudflared
          image: cloudflare/cloudflared:latest
          args:
            - tunnel
            - --config
            - /etc/cloudflared/config/config.yaml
            - run
          livenessProbe:
            httpGet:
              path: /ready
              port: 2000
            failureThreshold: 1
            initialDelaySeconds: 10
            periodSeconds: 10
          volumeMounts:
            - name: config
              mountPath: /etc/cloudflared/config
              readOnly: true
            - name: creds
              mountPath: /etc/cloudflared/creds
              readOnly: true
      volumes:
        - name: creds
          secret:
            secretName: <TUNNEL_SECRET_NAME>
        - name: config
          configMap:
            name: ghost-cloudflare-tunnel
            items:
              - key: config.yaml
                path: config.yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: ghost-cloudflare-tunnel
  namespace: ghost-blog
  labels:
    app: ghost-cloudflare-tunnel
data:
  config.yaml: |
    tunnel: <TUNNEL_NAME>
    credentials-file: /etc/cloudflared/creds/credentials.json
    metrics: 0.0.0.0:2000
    no-autoupdate: true
    ingress:
      - hostname: www.yoursite.com
        service: http://<SERVICE_NAME>.<NAMESPACE>.svc.cluster.local:80
      - hostname: yoursite.com
        service: http://<SERVICE_NAME>.<NAMESPACE>.svc.cluster.local:80
      - service: http_status:404
```

What’s Next?

I’ve been hosting two web applications in the cluster and exposing them via Cloudflare Tunnels, which provide encryption and safely expose only the resources I need.

Next, I’ll need to venture more into the self-hosting aspect by leveraging technologies such as Traefik, Cert-Manager, and the like. I’m still getting my feet wet, so exposing services using Cloudflare Tunnels suffices for now and provides what I need.

I also need to gain more familiarity with PVs/PVCs so I can properly restore my Longhorn backups and reattach them to deployments such as my Ghost blog. I’ve tested this in a K3s development cluster, and while I receive warnings when the Pods attach to the restored PVCs, it works. I’m probably missing something, though.
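One approach that may help with reattaching restored volumes is static binding: after restoring a backup to a new Longhorn volume, define a PV that points at that volume through the Longhorn CSI driver, and a PVC that binds to it by name, so the Deployment can keep mounting the same claim. This is a sketch, assuming the restored volume is named `ghost-content-restored` and is 5Gi (both hypothetical). Longhorn’s UI can also create the PV/PVC pair for a volume, which does essentially the same thing.

```yaml
# PV backed by an existing (restored) Longhorn volume.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ghost-content-restored
spec:
  capacity:
    storage: 5Gi                            # should match the restored volume's size
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain     # keep the Longhorn volume if the claim is deleted
  storageClassName: longhorn
  csi:
    driver: driver.longhorn.io              # Longhorn's CSI driver
    fsType: ext4
    volumeHandle: ghost-content-restored    # name of the Longhorn volume
---
# Claim that binds explicitly to the PV above instead of provisioning a new volume.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ghost-content                       # hypothetical; the claim the Deployment mounts
  namespace: ghost-blog
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: longhorn
  volumeName: ghost-content-restored
  resources:
    requests:
      storage: 5Gi
```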